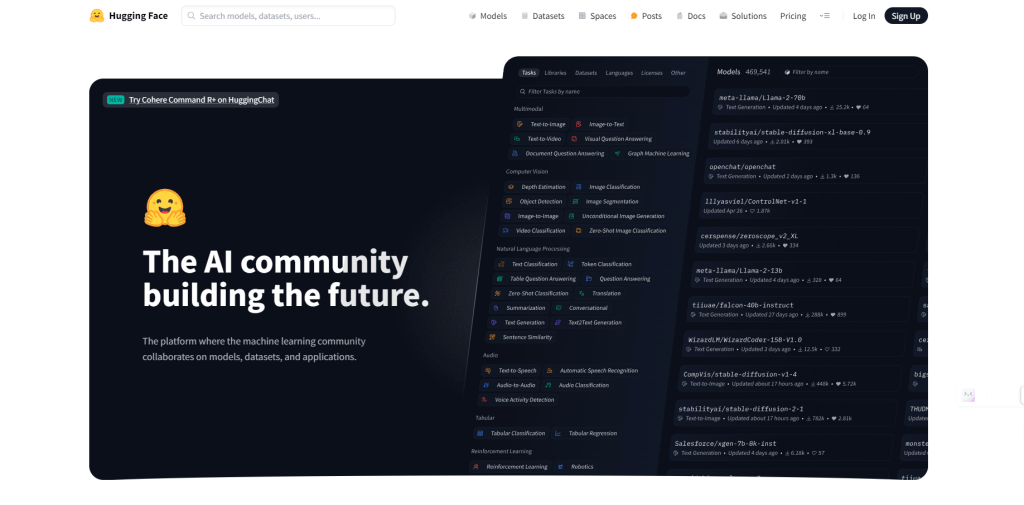

Hugging Face is a goldmine for anyone into natural language processing, packed with pre-trained language models that are easy to drop into different applications. For open Large Language Models (LLMs), it is the go-to place. In this piece, we'll dive into five of the top LLMs on Hugging Face, each playing a part in advancing how we understand and generate language.

Let's get started!

What Is a Large Language Model?

Large Language Models (LLMs) are advanced types of artificial intelligence designed to understand and generate human language. They are built using deep learning techniques, particularly a kind of neural network called a transformer.

Here's a breakdown to make it clear:

- Training on Massive Data: LLMs are trained on huge datasets that include books, articles, websites, and more. This extensive training helps them learn the nuances of language, including grammar, context, and even some level of reasoning.

- Transformers: The architecture behind most LLMs is called a transformer. This model uses attention mechanisms to weigh the importance of different words in a sentence, allowing it to understand context better than previous models.

- Tasks They Perform: Once trained, LLMs can perform various language tasks. These include answering questions, summarizing texts, translating languages, generating creative writing, and coding.

- Popular Models: Some well-known LLMs include GPT-3, BERT, and T5. These pre-trained models can be fine-tuned for specific tasks, making them versatile tools for developers and researchers.

- Applications: LLMs are used in chatbots, virtual assistants, automated content creation, and much more. They help improve user interactions with technology by making machines understand and respond to human language more naturally.

In essence, Large Language Models are like supercharged brains for computers, enabling them to handle and generate human language with impressive accuracy and versatility.
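To make the attention idea from the breakdown above concrete, here is a minimal sketch of scaled dot-product attention for a single query, in plain Python. The 2-d vectors are made up for illustration; real models use learned embeddings with hundreds or thousands of dimensions and many attention heads.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to the attention weights.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy embeddings for three words (hypothetical values, not from a real model).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = keys
query = [1.0, 0.0]
weights, output = attend(query, keys, values)
```

The words whose keys point in the same direction as the query get the largest weights, which is what "weighing the importance of different words" means in practice.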

Hugging Face & LLMs

Hugging Face is a company and a platform that's become a hub for natural language processing (NLP) and machine learning. They provide tools, libraries, and resources to make it easier for developers and researchers to build and use machine learning models, especially those related to language understanding and generation.

Hugging Face is known for its open-source libraries, especially Transformers, which provide easy access to a wide range of pre-trained language models.

Hugging Face hosts many widely used open models such as GPT-2, BERT, and T5, alongside newer open LLMs. These models are pre-trained on massive datasets and are ready to be used for various applications.

The platform provides simple APIs and tools for integrating these models into applications without requiring deep expertise in machine learning.
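As a rough illustration of how little code this takes, the sketch below wraps the Transformers `pipeline` helper for text generation. The default model ID `gpt2` and the sampling values are assumptions chosen to keep the download small; swap in any text-generation model from the Hub. The helper names `generation_settings` and `generate` are hypothetical, not part of the library.

```python
def generation_settings(max_new_tokens=50, temperature=0.7):
    """Collect sampling options in one place (illustrative defaults)."""
    return {
        "max_new_tokens": max_new_tokens,
        "do_sample": True,
        "temperature": temperature,
    }

def generate(prompt, model_id="gpt2"):
    """Generate text with a Hub model; weights are downloaded on first use."""
    # Imported lazily so the settings helper works without the library installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_id)
    result = generator(prompt, **generation_settings())
    return result[0]["generated_text"]
```

Calling `generate("Once upon a time")` should return the prompt followed by a sampled continuation. Larger models such as the ones covered later need far more memory than `gpt2`, so check the model card before loading them.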

Using Hugging Face's tools, you can easily fine-tune these pre-trained LLMs on your own data, allowing you to adapt them to specific tasks or domains.

Researchers and developers can share their models and enhancements on the Hugging Face platform, accelerating innovation and application in NLP.

Top 5 LLMs on Hugging Face You Should Use

Let's explore five of the top LLMs on Hugging Face. Several do well at storytelling, and some are reported to beat GPT-3.5 on certain benchmarks.

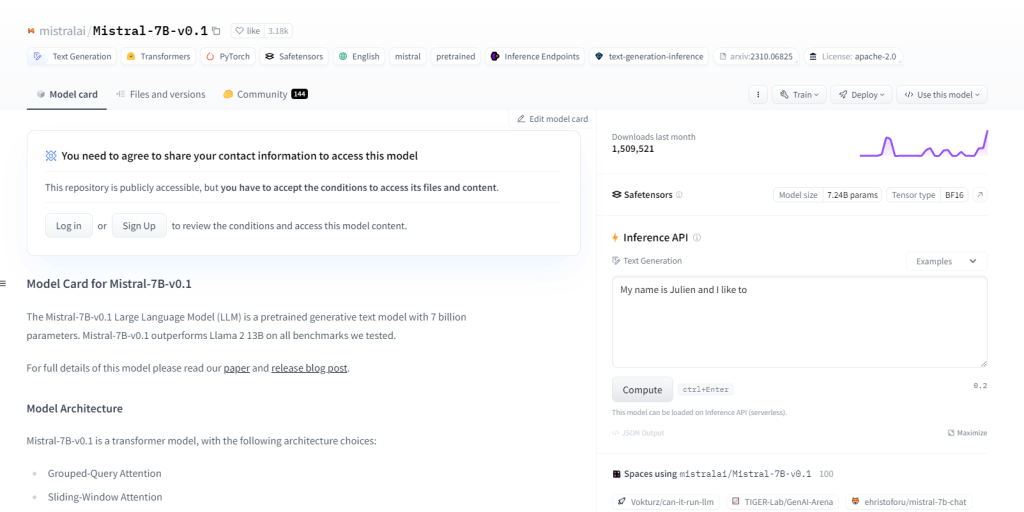

Mistral-7B-v0.1

Mistral-7B-v0.1, a Large Language Model (LLM) with 7 billion parameters, outperforms Llama 2 13B on the benchmarks its authors tested. It uses a transformer architecture with grouped-query and sliding-window attention and a byte-fallback BPE tokenizer. It handles text generation, natural language understanding, and language translation, and serves as a base model for research and development in NLP projects.
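The "byte-fallback" part of the tokenizer is easy to picture with a toy example. The sketch below is not Mistral's tokenizer: it uses a greedy longest-match over a tiny hypothetical vocabulary instead of real BPE merges. It only shows the fallback idea, where any character the vocabulary cannot cover is emitted as its UTF-8 bytes, so no input ever maps to an "unknown" token.

```python
def tokenize_with_byte_fallback(text, vocab):
    """Greedy longest-match tokenizer; uncovered characters become byte tokens."""
    tokens = []
    max_len = max(len(entry) for entry in vocab)
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            # No vocabulary entry matches: emit one token per UTF-8 byte.
            tokens.extend(f"<0x{b:02X}>" for b in text[i].encode("utf-8"))
            i += 1
    return tokens

# Hypothetical vocabulary, far smaller than a real one.
toy_vocab = {"hug", "ging", " ", "face"}
tokens = tokenize_with_byte_fallback("hugging face é", toy_vocab)
print(tokens)
# ['hug', 'ging', ' ', 'face', ' ', '<0xC3>', '<0xA9>']
```

The accented character is missing from the toy vocabulary, so it comes out as two byte tokens rather than being dropped or replaced.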

Key Features

- 7 billion parameters

- Outperforms Llama 2 13B on benchmarks

- Transformer architecture

- BPE tokenizer

- NLP Project Development

- Natural language understanding

- Language Translation

- Grouped-Query Attention
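Grouped-query attention lets several query heads share one key/value head, which shrinks the key/value cache at inference time. The sketch below shows only the head-sharing arithmetic. The head counts (32 query heads, 8 key/value heads) are assumed values for illustration, so check the model's configuration file for the real ones.

```python
def kv_head_for_query_head(query_head, n_query_heads, n_kv_heads):
    """Return the index of the key/value head that a query head shares."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into key/value heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size

# Assumed head counts for illustration.
N_QUERY_HEADS = 32
N_KV_HEADS = 8

mapping = [
    kv_head_for_query_head(h, N_QUERY_HEADS, N_KV_HEADS)
    for h in range(N_QUERY_HEADS)
]
```

With these numbers, query heads 0 to 3 read from key/value head 0, heads 4 to 7 from key/value head 1, and so on, so the cache stores 8 key/value heads instead of 32.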

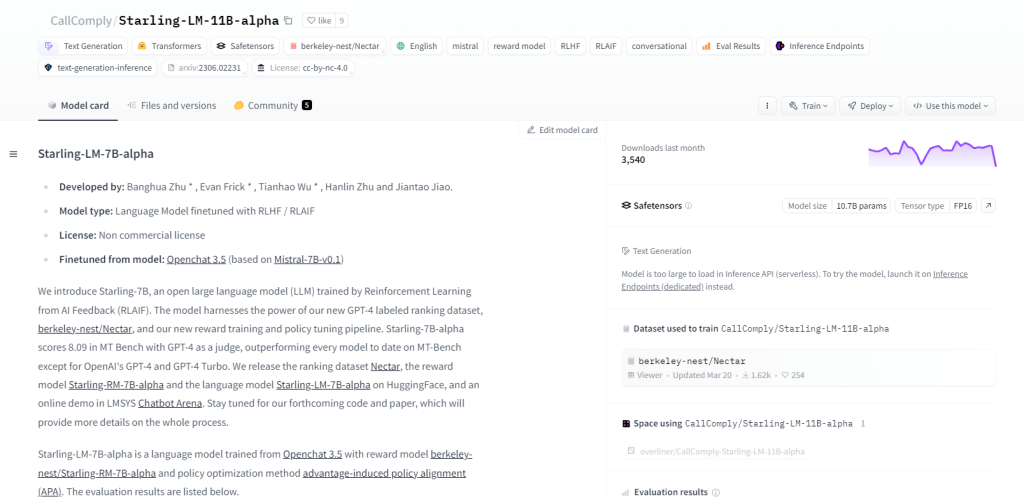

Starling-LM-11B-alpha

Starling-LM-11B-alpha, a large language model (LLM) with 11 billion parameters, comes from NurtureAI and uses the OpenChat 3.5 model as its base. It is fine-tuned with Reinforcement Learning from AI Feedback (RLAIF), guided by ranked preference data. With its open-source framework, it suits NLP tasks, machine learning research, education, and creative content generation.
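Ranking-based fine-tuning usually starts by turning each ranked list of responses into pairwise preferences and training a reward model on them. The sketch below shows that step with a Bradley-Terry style loss. It is a generic illustration under that assumption, not Starling's actual training code, and the response names are placeholders.

```python
import math
from itertools import combinations

def ranking_to_pairs(ranked_responses):
    """Expand a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_responses, 2))

def pairwise_loss(score_preferred, score_rejected):
    """Bradley-Terry style loss: small when the preferred response scores higher."""
    return math.log(1.0 + math.exp(-(score_preferred - score_rejected)))

# Placeholder responses, already ordered from best to worst.
pairs = ranking_to_pairs(["answer_a", "answer_b", "answer_c"])
print(pairs)
# [('answer_a', 'answer_b'), ('answer_a', 'answer_c'), ('answer_b', 'answer_c')]
```

A reward model trained to minimize this loss learns to score preferred responses higher, and that score then serves as the reward signal during reinforcement learning.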

Key Features

- 11 billion parameters

- Developed by NurtureAI

- Based on the OpenChat 3.5 model

- Fine-tuned through RLAIF

- Ranked preference data for training

- Open-source nature

- Diverse capabilities

- Use for research, education, and creative content generation

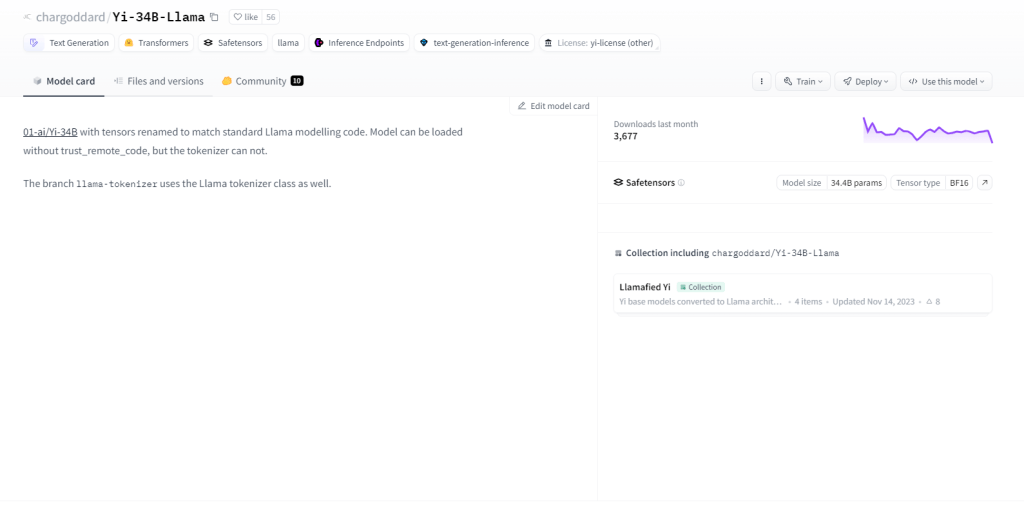

Yi-34B-Llama

Yi-34B-Llama, with its 34 billion parameters, showcases superior learning capacity. It excels in multi-modal processing, handling text, code, and images efficiently. Embracing zero-shot learning, it adapts to new tasks seamlessly. Its stateful nature enables it to remember past interactions, enhancing user engagement. Use cases include text generation, machine translation, question answering, dialogue, code generation, and image captioning.
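In practice, zero-shot use comes down to how the prompt is written: the model gets a task description and the input, with no solved examples. The sketch below builds such a prompt and, for contrast, a few-shot variant. The `Input:`/`Output:` layout and the `build_prompt` helper are assumptions for illustration, not a format the model requires.

```python
def build_prompt(task, text, examples=()):
    """Build a prompt; with no examples it is zero-shot, otherwise few-shot."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {text}", "Output:"]
    return "\n".join(lines)

task = "Translate the input from English to French."

# Zero-shot: task description and input only.
zero_shot = build_prompt(task, "Good morning")

# Few-shot: the same task with one worked example added.
few_shot = build_prompt(task, "Good morning", examples=[("Thank you", "Merci")])
```

Either string can be passed to a text-generation model as-is. The zero-shot version relies entirely on what the model learned during pre-training.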

Key Features

- 34 billion parameters

- Multi-modal processing

- Zero-shot learning capability

- Stateful nature

- Text generation

- Machine translation

- Question answering

- Image captioning

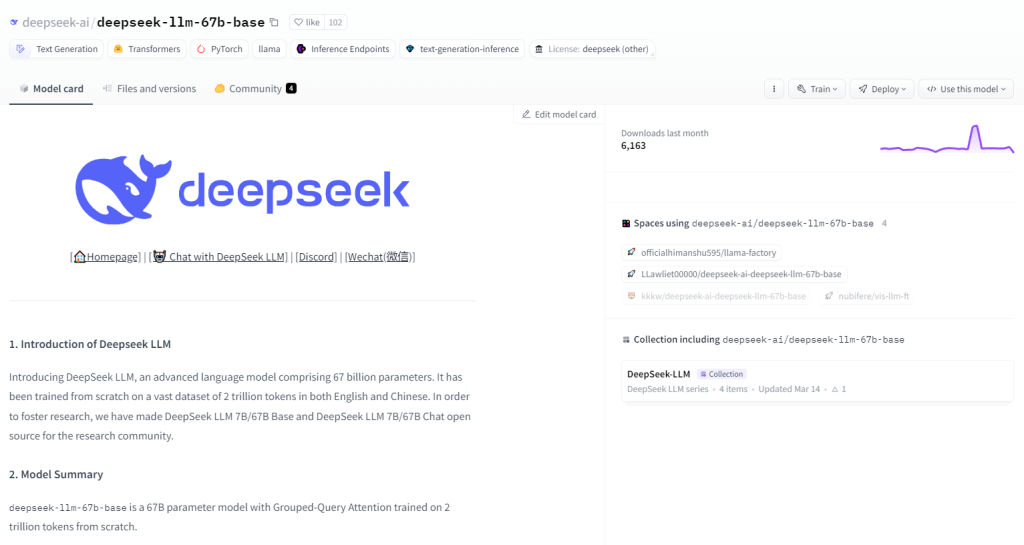

DeepSeek LLM 67B Base

DeepSeek LLM 67B Base, a 67-billion-parameter large language model (LLM), shines in reasoning, coding, and math tasks. Its reported scores surpass GPT-3.5 and Llama 2 70B Base, and it does especially well at code understanding, code generation, and math. Its open-source nature under the MIT license enables free exploration. Use cases span programming, education, research, content creation, translation, and question answering.

Key Features

- 67 billion parameters

- Exceptional performance in reasoning, coding, and mathematics

- HumanEval Pass@1 score of 73.78

- Outstanding code understanding and generation

- High scores on GSM8K 0-shot (84.1)

- Surpasses GPT-3.5 in language capabilities

- Open source under the MIT license

- Excellent storytelling & content creation capability.
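For readers unfamiliar with the HumanEval metric in the list above, Pass@1 is the k = 1 case of pass@k: the chance that at least one of k sampled solutions passes the unit tests. Below is a minimal sketch of the commonly used unbiased estimator. How many samples DeepSeek drew per problem is not stated here, so the numbers in the example are hypothetical.

```python
from math import comb

def pass_at_k(n, c, k):
    """Estimate pass@k from n sampled solutions, of which c passed the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failures exist, so any k samples include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

# Hypothetical problem: 10 samples drawn, 3 of them pass.
estimate = pass_at_k(n=10, c=3, k=1)
```

For k = 1 the estimate reduces to the fraction of samples that pass, here 3 out of 10. The benchmark score is the average of this value over all problems.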

Skote - Svelte Admin & Dashboard Template

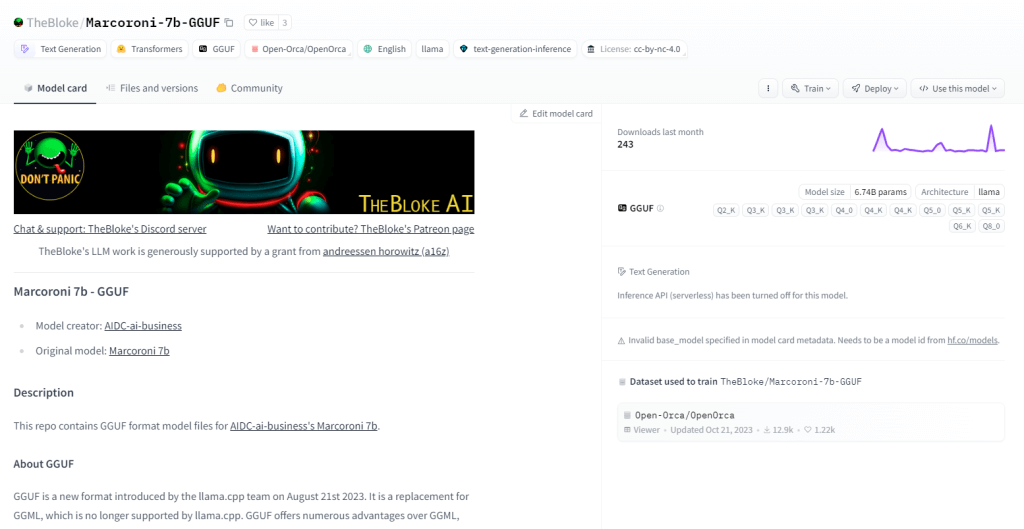

Marcoroni-7B-v3 is a powerful 7-billion-parameter multilingual generative model capable of diverse tasks, including text generation, language translation, creative content creation, and question answering. It processes both text and code, and uses zero-shot prompting to take on new tasks without task-specific training. Open-source and under a permissive license, Marcoroni-7B-v3 is easy to use and experiment with.

Key Features

- Text generation for poems, code, scripts, emails, and more.

- High-accuracy machine translation.

- Creation of engaging chatbots with natural conversations.

- Code generation from natural language descriptions.

- Comprehensive question-answering capabilities.

- Summarization of lengthy texts into concise summaries.

- Effective paraphrasing while preserving the original meaning.

- Sentiment analysis for textual content.

Wrapping Up

Hugging Face’s collection of large language models is a game-changer for developers, researchers, and enthusiasts alike. With their diverse architectures and capabilities, these models keep pushing the boundaries of natural language understanding and generation. As the technology evolves, the range of applications for these models keeps growing. The journey of exploring and innovating with Large Language Models is ongoing, promising exciting developments ahead.