As an AI enthusiast, I've witnessed the incredible rise of large language models (LLMs) firsthand. These powerful AI tools have revolutionized how we interact with technology, sparking dilemmas for businesses and individuals alike.

Should we embrace the convenience of subscription services or take control by hosting our own models? This question isn't just about cost; it touches on performance, privacy, and scalability.

In this blog post, I'll explore both options, drawing on my experience and the latest industry trends to help you make an informed decision that aligns with your specific needs and resources.

LLM Hosting vs ChatGPT Subscription: Understanding the Options

Let's break down our two leading contenders in the LLM arena.

Create Amazing Websites

With the best free page builder Elementor

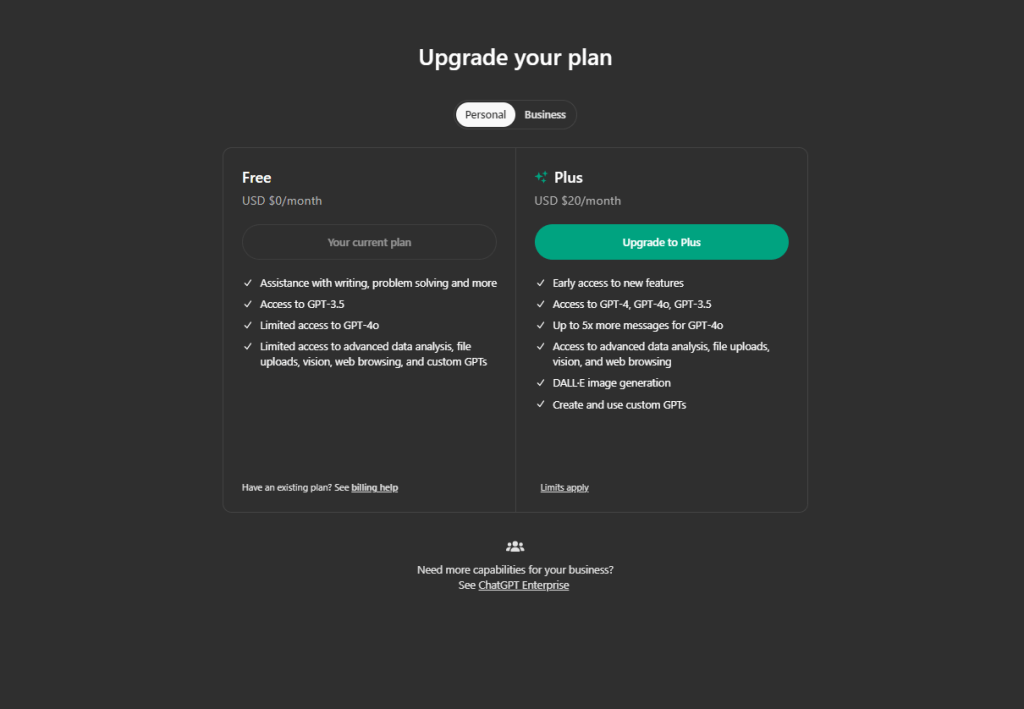

Start NowChatGPT Subscription

ChatGPT has become a household name for good reason. As a subscriber, you get:

- Access to state-of-the-art language models

- Regular updates and improvements

- A user-friendly interface

- Robust API for integration into various applications
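For developers, the API route can be sketched with nothing but Python's standard library. This is a minimal sketch that assumes OpenAI's Chat Completions endpoint and an API key you already have; the default model name is only an example, and in practice most people use the official `openai` package instead of raw HTTP.

```python
import json
import urllib.request

# OpenAI Chat Completions endpoint; the default model below is an example only.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request over the network and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage would look like `send_chat_request(build_chat_request("Summarize this email...", os.environ["OPENAI_API_KEY"]))`. Splitting "build" from "send" keeps the request easy to inspect and test without spending tokens.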

The pricing is straightforward: the ChatGPT app is a flat monthly subscription, while the API bills you per token based on usage. For casual users or small businesses, this can be quite cost-effective.

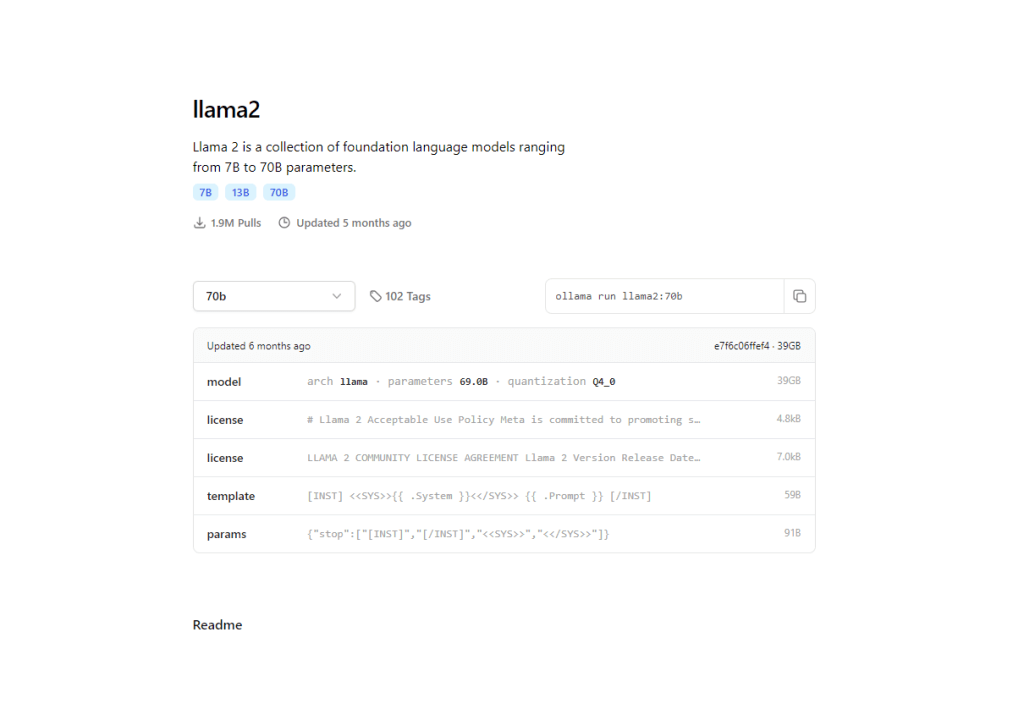

Self-Hosting Open-Source LLMs

On the flip side, we have the DIY approach. Popular open-source models like LLaMA and GPT-NeoX offer:

- Full control over your model

- Customization options

- Data privacy

- Potential for cost savings at scale

You've got two main deployment options here:

a) Cloud hosting (AWS, Google Cloud, Azure)

b) On-premises hardware
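Whichever path you choose, the first hardware question is whether the model fits in GPU memory. Here is a back-of-the-envelope sketch, assuming the weights dominate memory use and applying a hypothetical 20% overhead factor for the KV cache and activations (real overhead depends on context length and batch size).

```python
# Bytes needed per parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billion: float, precision: str = "fp16", overhead: float = 1.2) -> float:
    """Approximate GPU memory (GB) to serve a model: weights times a flat overhead factor (assumption)."""
    weights_gb = params_billion * BYTES_PER_PARAM[precision]
    return weights_gb * overhead


def fits_on_gpu(params_billion: float, gpu_vram_gb: float, precision: str = "fp16") -> bool:
    """True if the rough estimate fits within a single GPU's memory."""
    return estimate_vram_gb(params_billion, precision) <= gpu_vram_gb
```

By this estimate, a 7B model in fp16 (about 17 GB) fits on a 24 GB card such as the RTX 3090, while a 70B model does not, even quantized to 4 bits (about 42 GB). Larger models therefore push you toward multi-GPU servers, whether rented in the cloud or bought outright.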

Each path has its pros and cons, which we'll explore in depth as we go on.

The choice between these options isn't always clear-cut. It depends on your specific needs, resources, and long-term goals. In the following sections, I'll guide you through the key factors to consider, helping you make the best decision for your unique situation.

Cost Comparison

When it comes to LLMs, cost is often a major deciding factor. Let's break down the numbers.

ChatGPT Subscription

If you use ChatGPT through OpenAI's API, here's what it costs:

- At the time of writing, OpenAI charged $0.002 per 1K tokens for gpt-3.5-turbo.

- For perspective, 1K tokens are roughly 750 words.

- A typical day with 5,000 queries might cost around $6.50.

- That's about $200 per month for moderate usage.
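The arithmetic behind these figures is easy to reproduce. This sketch assumes a single flat price for all tokens (as with the $0.002 rate above) and roughly 650 tokens per query, prompt and response combined; that per-query figure is an assumption chosen because it reproduces the $6.50 daily estimate.

```python
def api_cost(queries_per_day: int, tokens_per_query: int, price_per_1k_tokens: float, days: int = 1) -> float:
    """Estimated API spend, assuming one flat price per 1K tokens."""
    total_tokens = queries_per_day * tokens_per_query * days
    return total_tokens / 1000 * price_per_1k_tokens


# 650 tokens per query is an assumption (prompt + response combined).
daily = api_cost(5000, 650, 0.002)
monthly = api_cost(5000, 650, 0.002, days=30)
print(f"daily: ${daily:.2f}, monthly: ${monthly:.2f}")
```

This gives $6.50 per day and $195 per month. Because cost is linear in both query volume and tokens per query, doubling either one doubles the bill.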

Sounds reasonable, right? But here's the catch: costs climb in step with usage. If you're handling hundreds of thousands of queries daily, your monthly bill can quickly become eye-watering.

Self-Hosting Open Source LLMs

If you self-host an open-source LLM, expect these costs:

- Hardware: A high-end GPU like an NVIDIA RTX 3090 costs around $700.

- Cloud hosting: On AWS, serving about 1M requests can run $150-$160 per day.

- Don't forget electricity: At $0.12/kWh, running a powerful GPU 24/7 adds up.

- Labor costs: You'll need expertise to set up and maintain your system.
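To put the electricity line item in numbers, here is a small sketch. It assumes an RTX 3090 drawing its rated 350 W board power around the clock at the $0.12/kWh rate above; the CPU, cooling, and power-supply losses add more, so treat the result as a floor.

```python
def monthly_electricity_cost(watts: float, price_per_kwh: float = 0.12,
                             hours_per_day: float = 24.0, days: int = 30) -> float:
    """Energy cost of a constant load running for the given hours and days."""
    kwh = watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


# Assumption: one GPU at its rated 350 W, 24/7; excludes the rest of the machine.
print(f"${monthly_electricity_cost(350):.2f} per month")
```

That works out to about $30 a month for a single card at full load; multiply by the number of GPUs and add the rest of the system.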

Initial setup for self-hosting is pricier, but it can be more cost-effective for high-volume usage over time.

Hidden Costs to Consider:

- Time: Setting up a self-hosted system isn't instant.

- Upgrades: Technology evolves fast in the AI world.

- Downtime: Self-hosted systems might face more interruptions.

The Verdict: ChatGPT's subscription model often wins in terms of cost for low-volume users. But if you're looking at millions of monthly queries, self-hosting could save you money in the long run.
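A quick way to find your own crossover point is to divide a monthly self-hosting budget by the API cost of one query. This sketch treats self-hosting as a fixed monthly cost, which is a simplifying assumption (real infrastructure costs step up as load grows); the $2,000 budget and 650 tokens per query are hypothetical inputs, not measured figures.

```python
def cost_per_query(tokens_per_query: int, price_per_1k_tokens: float) -> float:
    """API cost of a single query at a flat per-token price."""
    return tokens_per_query / 1000 * price_per_1k_tokens


def breakeven_queries_per_month(self_host_monthly_cost: float, tokens_per_query: int,
                                price_per_1k_tokens: float) -> float:
    """Monthly query volume at which API spend equals a fixed self-hosting budget."""
    return self_host_monthly_cost / cost_per_query(tokens_per_query, price_per_1k_tokens)


# Hypothetical all-in budget: hardware amortization, power, and staff time.
budget = 2000.0
print(f"{breakeven_queries_per_month(budget, 650, 0.002):,.0f} queries/month")
```

With these inputs the crossover lands at roughly 1.5 million queries per month, in line with the "millions of monthly queries" rule of thumb. Plug in your own labor and hardware numbers, since they usually dominate the budget.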

Performance and Quality

When it comes to LLMs, performance is king. Let's dive into how ChatGPT and open-source models stack up:

ChatGPT's Capabilities

- Consistently high-quality responses

- Broad knowledge base covering diverse topics

- Regular updates improving performance

- Ability to handle complex queries and tasks

I've found ChatGPT particularly impressive in creative writing and problem-solving scenarios. Its responses often feel human-like and contextually appropriate.

Open-Source LLM Performance

- Models like LLaMA 2 are catching up fast

- Some specialized models outperform ChatGPT in specific domains

- Customization potential for task-specific optimization

In my experience, recent open-source models like LLaMA 2 70B can match or even exceed GPT-3.5 in specific tasks. The gap is narrowing rapidly.

Customization is where open-source models really shine. With self-hosting, you can:

- Train on domain-specific data

- Optimize for particular tasks

- Potentially outperform generic models in niche applications

Businesses have achieved remarkable results by fine-tuning open-source models to their specific needs.
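Fine-tuning usually starts with turning domain examples into a training file. Here is a minimal sketch that converts question-and-answer pairs into chat-style JSONL; the `messages`/`role`/`content` layout is a common convention, but the exact schema is an assumption that depends on your fine-tuning framework.

```python
import json


def to_chat_jsonl(examples, system_prompt):
    """Convert (question, answer) pairs into one chat-style JSON record per line."""
    lines = []
    for question, answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # ensure_ascii=False keeps non-English text readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)
```

For a hypothetical contract-analysis project, each pair might be a clause question and a reviewed answer; the resulting string can be written straight to a `.jsonl` file.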

Here's how the two compare on key performance factors:

- Latency: Self-hosted models might offer lower response times

- Customization: Tailor open-source models to your exact requirements

- Consistency: ChatGPT ensures reliable performance across various tasks
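Latency claims are worth checking against your own workload. This harness is a sketch: `generate` is a hypothetical stand-in for whatever function calls your self-hosted server or the ChatGPT API, and the timings include network time, which is usually what users actually feel.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency(generate: Callable[[str], str], prompts: list[str], runs: int = 3) -> dict[str, float]:
    """Time each call and report median and nearest-rank p95 latency in milliseconds."""
    samples = []
    for _ in range(runs):
        for prompt in prompts:
            start = time.perf_counter()
            generate(prompt)
            samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    p95_index = math.ceil(0.95 * len(samples)) - 1
    return {"median_ms": statistics.median(samples), "p95_ms": samples[p95_index]}
```

Reporting p95 alongside the median matters because occasional slow responses (cold starts, queueing, rate limits) are hidden by averages.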

The Verdict: ChatGPT offers top-tier performance out of the box. However, if you have specific, specialized needs, a fine-tuned open-source model could potentially outperform it in your particular use case.

Data Privacy and Control

Nowadays, privacy isn't just a luxury—it's a necessity. Let's examine how ChatGPT and self-hosted LLMs handle this crucial aspect:

ChatGPT's Data Handling

- OpenAI has strict privacy policies

- Conversations in the consumer ChatGPT app may be used for model improvement; API data is not used for training by default

- Option to opt out of data sharing, but with potential performance trade-offs

While OpenAI is committed to user privacy, sending sensitive data to a third party always carries some risk. I've seen businesses hesitate to use ChatGPT for confidential information.
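If you do send data to a third-party API, one common mitigation is to redact obvious identifiers first. This is a minimal sketch with deliberately simple regular expressions, which are assumptions and not a complete PII detector: they will miss many formats and may also catch other long digit runs, such as dates.

```python
import re

# Deliberately simple patterns (assumption); real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com or +1 555-123-4567.")` returns `"Contact [EMAIL] or [PHONE]."`. Redaction reduces exposure but doesn't eliminate it, which is why highly sensitive workloads often end up self-hosted.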

Self-Hosting LLM Benefits

- Complete control over your data

- No data sent to an external provider

- Ability to implement custom security measures

In my experience, this level of control is a game-changer for industries like healthcare or finance, where data privacy is paramount.

I've worked with companies that chose self-hosting specifically to meet stringent compliance requirements.

Key Privacy Factors:

- Data Ownership: With self-hosting, you retain full ownership of your data and model

- Transparency: Open-source models allow you to inspect and understand the code

- Customization: Implement privacy features tailored to your specific needs

The Verdict: If data privacy is your top priority, self-hosting an open-source LLM gives you unparalleled control. However, ChatGPT's robust privacy measures may be sufficient for less sensitive applications.

Scalability and Flexibility

Let's explore how ChatGPT and self-hosted LLMs measure up in terms of scalability and flexibility.

ChatGPT's Scalability Options

- Seamless scaling with increased usage

- No infrastructure management required

- API allows for easy integration into various applications

On customization, however, ChatGPT is limited to API parameters and prompt engineering.

I've seen businesses rapidly scale their AI capabilities using ChatGPT without worrying about backend logistics. It's impressively hassle-free.

Self-Hosted LLM Flexibility

- Complete control over model size and capabilities

- Ability to scale horizontally across multiple machines

- Freedom to optimize for specific hardware configurations
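Horizontal scaling means running several copies of the model and spreading requests across them. In production a dedicated load balancer usually handles this, but the core idea can be sketched as a thread-safe round-robin router; the endpoint URLs in the usage note are hypothetical.

```python
import itertools
import threading


class RoundRobinRouter:
    """Hand out inference-server endpoints in turn so load spreads evenly."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self._cycle = itertools.cycle(list(endpoints))
        self._lock = threading.Lock()  # next() on a shared cycle is guarded for concurrent callers

    def next_endpoint(self) -> str:
        with self._lock:
            return next(self._cycle)
```

With `RoundRobinRouter(["http://gpu-node-1:8000", "http://gpu-node-2:8000"])`, successive calls alternate between the two nodes; adding capacity is a matter of adding another endpoint. Round-robin ignores how busy each node is, so least-connections strategies are a common refinement.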

For customization, you get full access to the model architecture and weights, and you can fine-tune the model on your own data.

In my projects, this level of control has been invaluable for fine-tuning performance and cost efficiency.

The Verdict: ChatGPT excels in effortless scalability, making it ideal for businesses with fluctuating demands. However, self-hosting offers unparalleled flexibility for those willing to manage their own infrastructure.

Technical Considerations

Having worked with AI for a long time, I can tell you that the technical aspects are crucial. Let's break down what you need to know.

ChatGPT requires minimal setup; OpenAI manages everything else.

To self-host an LLM, you need a deep understanding of machine learning and natural language processing. You'll also need proficiency in cloud infrastructure or on-premises hardware management, plus solid experience with fine-tuning and optimization.

Many people underestimate the expertise needed, leading to setbacks. It's not just about downloading a model—it's about effectively deploying and maintaining it.

Key Technical Factors:

- Latency: Self-hosted models can offer lower response times if optimized correctly

- Customization: Full control over model architecture and training data

- Debugging: Easier to diagnose and fix issues with self-hosted models

The Verdict: ChatGPT is the clear winner for those seeking a plug-and-play solution. However, if you have the technical expertise and desire for full control, self-hosting can offer unparalleled customization and potential performance benefits.

Use Case Analysis

As an AI professional, I've seen various scenarios where different LLM solutions shine. Let's explore some real-world use cases to guide your decision.

ChatGPT Subscription

Small and medium-sized businesses with limited technical resources and a need for quick deployment often benefit from ChatGPT's ease of use. I've watched startups rapidly prototype AI features this way, saving months of development time.

Content creation and marketing teams thrive with ChatGPT's diverse language capabilities. A marketing agency I worked with used it to brainstorm campaign ideas and draft social media posts, significantly boosting their productivity.

Customer support automation is another area where ChatGPT excels. Its 24/7 availability and ability to handle general inquiries make it a go-to choice for many businesses I've advised.

Self-Hosting LLMs

Large enterprises with high volumes often find self-hosting more economical. I helped an e-commerce platform switch to a self-hosted model, cutting its AI costs by 60% over a year.

Highly regulated industries benefit from the control self-hosting offers. A fintech company I advised chose this route to ensure GDPR compliance and protect sensitive financial data.

Specialized domain applications often require custom models. I worked with a legal tech startup that fine-tuned an open-source model on legal documents; it outperformed generic models in contract analysis.

Key factors include query volume, data sensitivity, technical resources, and customization needs. Higher volumes and more sensitive data often justify self-hosting, while limited resources favor ChatGPT.
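These factors can be condensed into a toy rule of thumb. This sketch is illustrative only: the field names and the 1.5-million-query break-even default are hypothetical, and a real decision should weigh the factors rather than apply hard thresholds.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    monthly_queries: int
    sensitive_data: bool
    has_ml_team: bool
    needs_custom_model: bool


def recommend(req: Requirements, breakeven_queries: int = 1_500_000) -> str:
    """Toy heuristic mirroring the factors above; thresholds are hypothetical."""
    # Limited technical resources favor the managed option regardless of other factors.
    if not req.has_ml_team:
        return "ChatGPT"
    # With a capable team, any one strong driver tips the balance toward self-hosting.
    drivers = [
        req.monthly_queries >= breakeven_queries,
        req.sensitive_data,
        req.needs_custom_model,
    ]
    return "self-host" if any(drivers) else "ChatGPT"
```

A team with an ML engineer, modest volume, and no sensitive data gets "ChatGPT"; the same team handling regulated financial data gets "self-host".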

Conclusion

After diving deep into the world of LLMs, it's clear that the choice between ChatGPT and self-hosted models isn't black and white. As an AI enthusiast and professional, I've seen successful implementations of both approaches.

ChatGPT shines in its ease of use, consistent performance, and regular updates. It's an excellent choice for businesses looking for a quick, hassle-free AI solution. The subscription model works well for moderate usage and general applications.

Self-hosting, on the other hand, offers unparalleled control, customization potential, and data privacy. It's ideal for high-volume users, specialized applications, and industries with strict data regulations. The initial setup might be challenging, but the long-term benefits can be substantial.

Remember, this isn't a permanent choice. As your needs evolve and the AI landscape shifts, you can always reassess and switch approaches.

Ultimately, the best choice is the one that aligns with your goals, resources, and vision for AI integration in your workflow. Whether you opt for the convenience of ChatGPT or the control of self-hosting, you're stepping into an exciting world of AI-powered possibilities.