The rise of AI-powered virtual characters and avatars, such as those on Character AI, presents exciting possibilities for entertainment, companionship, and more. However, as these AI personas grow in popularity, so do concerns about data privacy, identity manipulation, age-appropriate content, and ethical use.

As virtual characters become increasingly lifelike and engaging, users should approach them mindfully, weighing each platform's safety measures against its potential risks.

This article examines how AI avatars and characters work, what they can do, which safety protocols they rely on, and how to use them responsibly, so that users can navigate them with informed judgment. We aim to answer the vital question “Is it safe to use AI characters and avatars?” while exploring their promising future.

Understanding AI Characters/Avatars

AI-powered virtual characters and avatars, such as those created on platforms like Character AI, use natural language processing to engage users in conversation. Essentially, they are chatbots designed to simulate human-like personalities and behaviors, producing replies that adapt to the context of each exchange.

These AI personas offer users a high degree of customization, from crafting unique identities to shaping distinct personality traits, voices, emotional ranges, and more. Users can take part in roleplay scenarios and group conversations, ask characters questions, and give feedback on responses to help improve their quality over time.

On a technical level, AI avatars are typically built on large language models: neural networks with a transformer architecture, the same family as generative pretrained transformers (GPT). These models generate replies by predicting likely continuations of a conversation from patterns learned in their training data. As the models continue to evolve, the realism, interactivity, and versatility of AI characters keep advancing.

With careful usage and ongoing safety evaluations, AI avatars present a wealth of opportunities for entertainment, companionship, education, creativity and beyond. But users should be informed about their capabilities, limitations and potential risks.

Assessing the Safety of AI Characters/Avatars

With the rising prominence of AI-powered chatbots and virtual companions like Character AI, a robust evaluation of their safety and of responsible usage practices is crucial. As these systems become more advanced and lifelike, they may pose unforeseen privacy, consent, and ethical risks if businesses or individuals deploy them irresponsibly.

A comprehensive safety assessment examines key areas such as data security, content controls, identity protections, and policy transparency. It also tracks how systems evolve, so that protections can adapt as capabilities expand. Evaluating current safety measures and advocating for users' rights becomes more important as AI avatar adoption grows.

Data Security and Privacy

Examining an AI avatar platform's policies for collecting, storing, sharing, and deleting data reveals how well it protects privacy. Best practices include collecting only the user information that is necessary, letting users delete their data, securing systems against breaches, and restricting data access to staff who genuinely need it. As capabilities advance, ongoing audits help confirm that privacy standards keep pace.
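The data-minimization and deletion practices above can be illustrated with a small sketch. It assumes a hypothetical platform that needs only a user ID, display name, and birth year; the `UserStore` class and `REQUIRED_FIELDS` allowlist are invented for illustration, not any real platform's code. The store drops every non-allowlisted field before saving and honors deletion requests.

```python
from dataclasses import dataclass, field

# Hypothetical allowlist: the only profile fields this illustrative platform
# needs to run a chat session. Everything else is discarded.
REQUIRED_FIELDS = {"user_id", "display_name", "birth_year"}


@dataclass
class UserStore:
    """Toy in-memory store showing data minimization and user deletion."""

    records: dict = field(default_factory=dict)

    def save_profile(self, profile: dict) -> dict:
        # Keep only allowlisted fields; extras such as a home address are
        # dropped before anything is stored. Raises KeyError if user_id is missing.
        minimized = {k: v for k, v in profile.items() if k in REQUIRED_FIELDS}
        self.records[minimized["user_id"]] = minimized
        return minimized

    def delete_user(self, user_id: str) -> bool:
        # Honor a deletion request; True if a record existed and was removed.
        return self.records.pop(user_id, None) is not None


store = UserStore()
saved = store.save_profile(
    {"user_id": "u1", "display_name": "Sam", "birth_year": 2001, "home_address": "1 Main St"}
)
print(saved)  # {'user_id': 'u1', 'display_name': 'Sam', 'birth_year': 2001}
print(store.delete_user("u1"))  # True
```

An allowlist is used rather than a blocklist because it fails safe: any new field a client starts sending is discarded by default instead of being stored by accident.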

Content Controls and Restrictions

Evaluating the filters, moderation systems, and parental controls a platform uses to restrict inappropriate content sheds light on how safe its AI avatars are. Dynamic content screening, human review of conversations, and user-adjustable settings help prevent minors and unwilling participants from being exposed to harmful material.

However, overly broad monitoring of conversations can itself threaten user privacy. The right balance safeguards users while preserving their agency.

Identity Protection and Consent

As AI avatars grow more realistic, the risk of identity manipulation makes it necessary to evaluate the consent protocols and identity safeguards a platform has in place. Are there sufficient guidelines to prevent the non-consensual use of real people's identities or the creation of deepfakes?

Do policies deter fraudulent personas and harassment? Examining a platform's legal and ethical protocols reveals how highly the organization prioritizes user rights.

Age Restrictions and Parental Guidance Needs

As AI avatars grow more capable of simulating human interactions and personalities, evaluating their age-appropriateness and age restrictions helps determine how safe they are for younger users. Key factors include compliance with COPPA (the U.S. Children's Online Privacy Protection Act), default age limits, the availability of child profiles with stricter restrictions, and the effectiveness of age and identity verification systems.

It is also key to assess whether parental control tools, family safety modes, and educational resources give guardians what they need to make informed decisions about children's use. Responsible avatar platforms should prioritize age-appropriate protections and support parental supervision rather than allowing minors unchecked access.
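To make the age-gating idea concrete, here is a minimal sketch of how a platform might map a self-reported birth date to an account tier. The 13-year cutoff mirrors COPPA's definition of a child; the 18-year adult cutoff, the tier names, and the `parental_consent` flag are assumptions for illustration only. The sketch also shows why verification matters: a self-reported date is trivially easy to falsify.

```python
from datetime import date

# COPPA treats users under 13 as children in the U.S. The 18-year adult
# cutoff and the tier names below are illustrative assumptions.
COPPA_AGE = 13
ADULT_AGE = 18


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def account_tier(birth_date: date, today: date, parental_consent: bool = False) -> str:
    """Map a self-reported birth date to a hypothetical account tier."""
    age = age_on(birth_date, today)
    if age < COPPA_AGE:
        # Under 13: blocked unless verifiable parental consent is on record,
        # and even then limited to a restricted child profile.
        return "child_restricted" if parental_consent else "blocked"
    if age < ADULT_AGE:
        return "teen_filtered"  # stricter content filters enabled by default
    return "standard"


check_date = date(2024, 1, 1)
print(account_tier(date(2015, 6, 1), check_date))  # blocked
print(account_tier(date(2010, 1, 2), check_date))  # teen_filtered
print(account_tier(date(2000, 5, 5), check_date))  # standard
```

Passing `today` explicitly, rather than calling `date.today()` inside the function, keeps the logic deterministic and easy to test.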

Ethical Considerations Around Consent and Responsible Use

The ethical implications of increasingly lifelike AI avatar systems also warrant evaluation through the lens of user rights and consent. For instance, creating detailed virtual doppelgangers of real people without their consent, or misrepresenting identities, may be unethical even where it is not illegal.

Responsible usage guidelines covering identity creation, offensive content, and fraud demonstrate how much a platform prioritizes user well-being.

Likewise, giving users control, such as the ability to delete identities and erase conversation data, shows that a platform respects consent and agency. As avatar capabilities grow, ethical safeguards must keep pace to prevent misuse and violations of user rights while still allowing innovative applications. Regular external audits help keep new capabilities aligned with ethical standards.

Best Practices for Safe Usage

As AI avatars and chatbots become more prominent, prudent usage guidelines protect individuals and communities from privacy infringement and misuse while supporting responsible innovation. By informing users about sound practices, avatar platforms support user agency and give consent and ethics the same priority as novel applications.

Adopting best practices helps maintain constructive interactions, preserves user rights and highlights considerations for specific demographics like children. Furthermore, widespread adoption of safe usage principles helps shape the evolution of this emerging technology responsibly.

Caution Around Sharing Personal Information

When conversing with AI avatars, users should be careful about sharing private details such as addresses, passwords, or other sensitive information. However human-like an avatar may seem, it is still software operated by a company whose data policies govern how conversations are stored and used. Avoiding disclosure of personal information that could be exploited is prudent.
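For readers who build or script their own chat clients, here is a minimal sketch of a pre-send filter that masks obvious personal details. The `redact` function and its two regular expressions are hypothetical and deliberately simple: they catch common email and phone formats but will miss names, street addresses, and much else, so they complement rather than replace user discretion. This is not a feature of Character AI or any other platform.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs much
# broader coverage (names, street addresses, ID numbers, other phone formats).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(message: str) -> str:
    """Replace matched personal details with placeholder tags before sending."""
    for label, pattern in PII_PATTERNS.items():
        message = pattern.sub(f"[{label}]", message)
    return message


print(redact("Email me at sam@example.com or call 555-123-4567"))
# Email me at [EMAIL] or call [PHONE]
```

Pattern-based redaction like this is cheap and runs locally, but it produces both misses and false positives (a long run of numbers may be masked as a phone number), which is why it should be treated as a safety net rather than a guarantee.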

Staying Updated on Terms of Service and Privacy Policies

As AI avatar platforms scale rapidly, their privacy standards, safety tools, and user policies may change. Regularly reviewing the latest terms of service and data practices keeps users aware of how their personal information is secured and used, and helps them spot concerning changes promptly.

Parental Supervision for Underage Users

Guardian oversight is key when minors interact with AI personas, to prevent exposure to age-inappropriate content and the oversharing of personal information. Platform-provided parental controls, child safety modes, and family guidance resources help create protected environments, and combining them with active adult supervision works best.

Reporting Inappropriate Content or Behavior

Clear, accessible reporting tools for flagging offensive avatar behavior help build healthier communities. Whether a problem originates with the AI or with another user, reporting policy violations, identity infringement, or abuse lets the platform provide redress and strengthen its safeguards.

Fact-Checking Information Shared by AI Characters

Despite their advanced capabilities, AI avatars can state false information or perpetuate biases in conversation. Users should therefore evaluate what they are told critically rather than assume it is reliable, and platforms should keep strengthening their accuracy checks.

The Future of AI Characters/Avatars

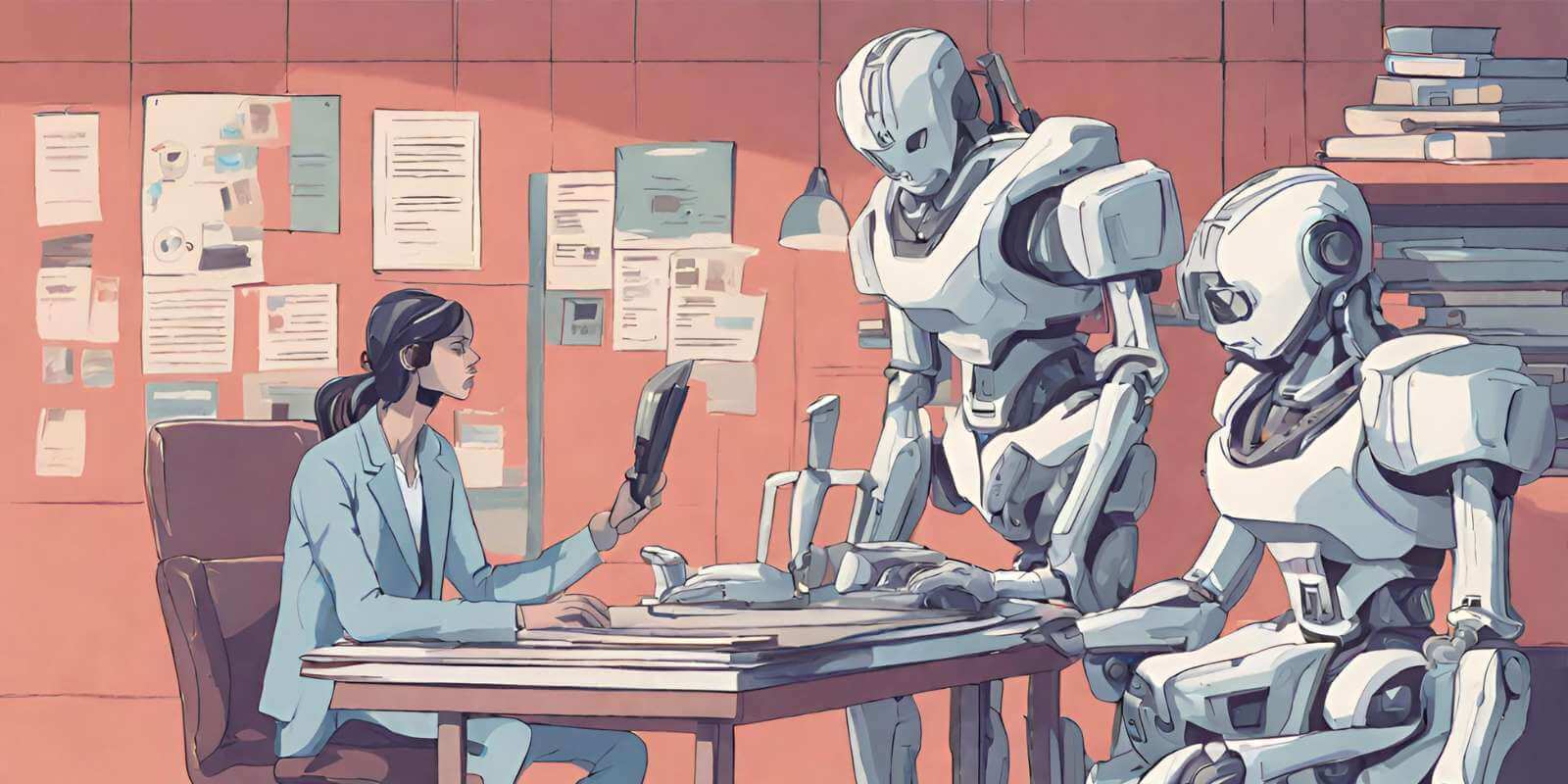

As AI chatbots and virtual companions continue to advance, becoming more lifelike and interactive, their future promises exciting new applications alongside evolving safety considerations.

AI avatar platforms are expected to grow more versatile, with enhancements such as video and AR embodiments, greater emotional intelligence and creativity, and deeper personalization and contextual awareness. As underlying language models are refined with more data and better optimization, avatars may hold up convincingly through prolonged Turing-test-style conversations, exhibiting remarkably human behavior.

With such progress, applications could expand towards emotional support, customized education, interactive gaming, accessible virtual relationships and more immersive entertainment mediums. Businesses may employ intelligent virtual assistants to improve customer experiences and even test products or services through AI focus groups.

However, risks around consent, emotional manipulation, data exploitation, misinformation, and bias in the underlying models also grow alongside these advances. Sustained safety assessments, external audits, and AI ethics oversight will therefore remain crucial. Training approaches such as Constitutional AI, which guides a model's behavior with an explicit, written set of principles, offer promise for aligning these systems with human values in a more transparent and accountable way.

Overall, with collaborative efforts between users, policymakers, researchers and platforms, AI avatars have immense potential as technologies of human empowerment rather than simply as tools for commercial interests.

Wrapping Up

As AI-powered avatar platforms like Character AI continue to advance, mimicking human interactions with increasing precision, their exciting potential comes entwined with evolving risks. Though such innovations promise applications from entertainment to education, their misuse could infringe on privacy, manipulate users or perpetuate bias.

Hence, alongside the progress of Character AI and other companies, assessing and strengthening ethical safeguards is crucial. Consent protocols, age controls, misinformation policies, transparent oversight, and community usage principles will shape whether these platforms empower users or exploit them. By aligning AI avatar innovation with human values, the industry can nurture the benefits of these systems while minimizing their risks.