As the world becomes increasingly digital, the demand for high-quality content has never been greater. Fortunately, advancements in natural language processing (NLP) have given rise to powerful AI-driven writing tools, such as those developed by Hugging Face. But what if you need to utilize these models offline? Fear not, as we'll explore how to leverage Hugging Face models without an internet connection.

Offline access to Hugging Face's cutting-edge language models opens up a world of possibilities. Whether you're working in remote locations, dealing with unreliable internet connectivity, or simply prioritizing data privacy, the ability to run these models locally can be a game-changer. By understanding how to deploy Hugging Face models offline, you can unlock new levels of efficiency, flexibility, and control in your content creation process.

In this blog post, we'll dive into the practical steps you can take to harness the power of Hugging Face models offline. From model deployment to seamless integration with your existing workflows, you'll learn how to maximize the potential of these advanced NLP tools, even when an internet connection is not readily available. Prepare to elevate your content creation game and stay ahead of the curve in the ever-evolving world of digital marketing and communication.

Why You Might Want To Use Hugging Face Models Offline

You might wonder why anyone would bother running AI models offline. But trust me, there are some seriously compelling reasons to take your Hugging Face models off the grid.

Using Hugging Face models offline offers several key advantages:

- Reliability: Work uninterrupted without worrying about internet connectivity issues.

- Speed: Eliminate network latency for faster model inference.

- Privacy: Keep sensitive data local, avoiding potential security risks of online processing.

- Cost-efficiency: Reduce cloud computing expenses by running models on your hardware.

- Portability: Use advanced AI capabilities in remote locations or areas with limited internet access.

- Learning: Gain a deeper understanding of model architecture and functioning by working with them directly.

- Customization: More easily fine-tune and adapt models for specific use cases when you have local access.

Let's dive in and explore how to harness the full potential of Hugging Face models, anytime and anywhere.

How To Use Hugging Face Models Offline

Hugging Face is like GitHub or an app store for open-source AI. There you can browse, download, and use numerous open-source LLMs. Like ChatGPT, it also offers a chatbot, HuggingChat, which lets you pick a model and then converse with it as you would with any other multi-model AI assistant.

How great would it be to use these models every day, offline and completely free? Let's do it.

LM Studio

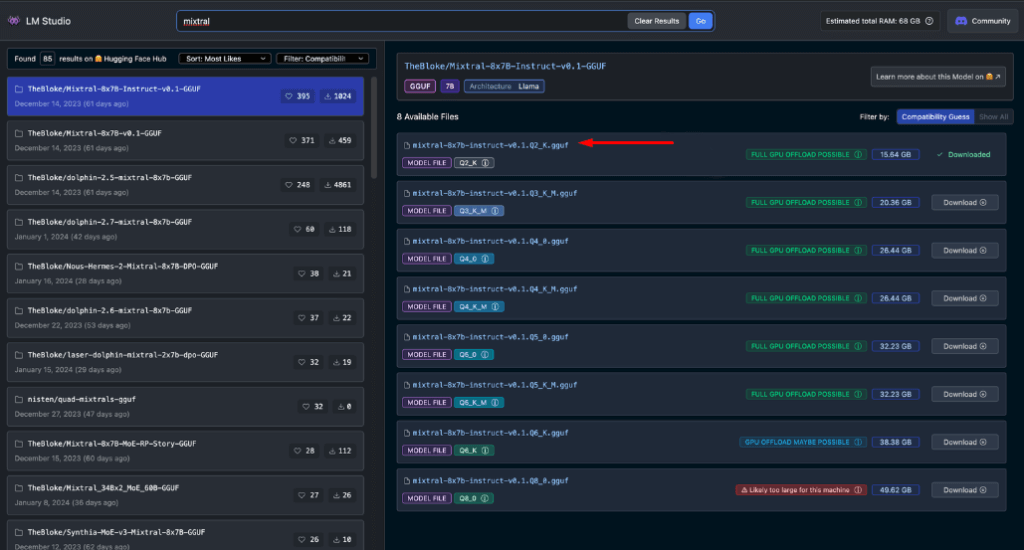

LM Studio is revolutionizing the way we use AI models locally. This versatile app, available for Windows, Mac (Apple Silicon), and Linux (beta), empowers users to download and run Hugging Face models offline with ease.

Key features of LM Studio include:

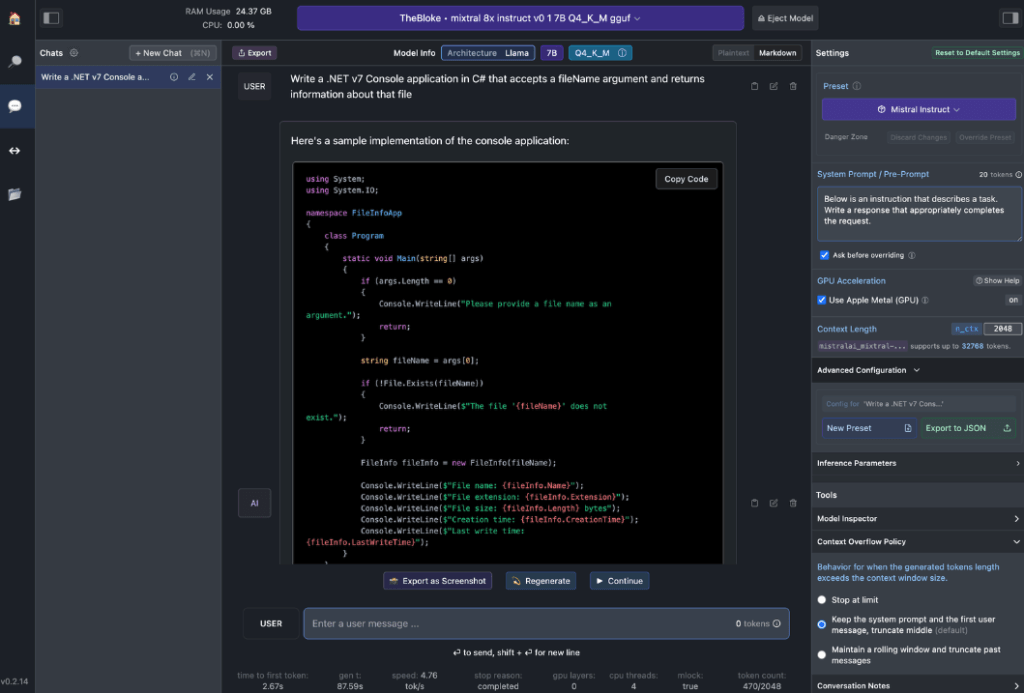

- Local Model Interaction: Download your preferred large language model (LLM) and chat with it as you would with any chat-based AI assistant. Customize performance and output format, and explore experimental features through the built-in system prompts and options.

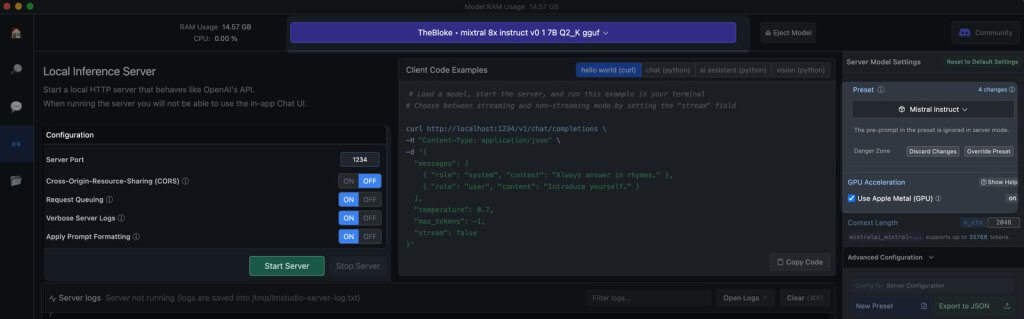

- OpenAI API Emulation: This is where LM Studio truly shines. Load your downloaded Hugging Face model, and LM Studio ingeniously wraps a local API proxy around it, mimicking the OpenAI API. This compatibility is a game-changer, as it allows seamless integration with numerous SDKs and popular Editor & IDE extensions that already support OpenAI.

Workstation Hardware Requirements

To truly unlock the potential of offline Hugging Face models, you'll need some robust hardware. While any modern computer can handle basic tasks, optimal performance requires more powerful specs:

For Apple users:

- M2/M3 Max or Ultra processors

- Higher-end configurations

- 50+ GB of RAM (shared with the GPU on Apple Silicon SoCs)

- Metal support for enhanced performance

For Windows users:

- A CPU with a high core count

- Substantial RAM

- RTX 30 or 40 series GPU with ample VRAM

Don't worry if you lack a top-tier system. Many RAM- and VRAM-friendly options exist, even among the latest models. LM Studio helpfully estimates whether a model will fit your system as you browse and download models.

Generally, larger model files and higher resource requirements correlate with improved output quality. While it's best not to push your system to its absolute limits, don't be afraid to aim high within your hardware's capabilities.

Remember: The goal is to balance model performance with your system's resources for the best offline Hugging Face experience.
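To make that balance concrete, here is a rough back-of-the-envelope sketch for estimating how much memory a model needs. It assumes the common rule of thumb that weights take roughly "parameter count times bits per weight", plus some headroom for context and runtime buffers. The 20% overhead figure and both function names are assumptions for illustration, not something LM Studio reports.

```python
def estimate_model_memory_gb(params_billion: float, bits_per_weight: float,
                             overhead_fraction: float = 0.2) -> float:
    """Rough memory (in GB, 10^9 bytes) needed to load a model.

    overhead_fraction is an assumed allowance for context and runtime
    buffers; real usage depends on the runtime and context length.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead_fraction) / 1e9


def fits_in_memory(params_billion: float, bits_per_weight: float,
                   available_gb: float, overhead_fraction: float = 0.2) -> bool:
    """True if the estimated footprint is within the available RAM/VRAM."""
    needed = estimate_model_memory_gb(params_billion, bits_per_weight,
                                      overhead_fraction)
    return needed <= available_gb


if __name__ == "__main__":
    # Compare the same 7B-parameter model at two precisions.
    for label, bits in [("4-bit quantized", 4), ("16-bit", 16)]:
        gb = estimate_model_memory_gb(7, bits)
        print(f"7B model, {label}: about {gb:.1f} GB")
```

Treat the result as a first filter only. LM Studio's own compatibility hints and a quick trial run are the better guide for a specific model file.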

Recommended Models for Offline Use

When running Hugging Face models offline, choosing the right model is crucial. Through extensive testing, I've found the following models to deliver consistently impressive results for both general chat and coding tasks:

Each of these models offers a unique balance of performance and resource usage, making them excellent choices for offline deployment.

Before proceeding further, it's essential to evaluate these models yourself:

- Download each model in LM Studio

- Use the AI chat tab to interact with the model

- Test its performance on various tasks, especially coding if that's your focus

- Assess the quality of the outputs and how well they align with your needs

Remember, your experience may differ based on your specific use case and hardware capabilities. Take the time to find the model that best suits your requirements. This careful selection process will ensure you have the most effective offline Hugging Face model for your needs before moving on to integration and more advanced usage.
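If you prefer to script this comparison instead of typing the same prompts into the chat tab for every model, a small harness like the following could help. It is a sketch only: `ask` is a hypothetical callable that you would supply yourself (for example, a function that sends a prompt to whichever model is currently loaded), and the model names are placeholders.

```python
from typing import Callable, Dict, List


def collect_responses(models: List[str], prompts: List[str],
                      ask: Callable[[str, str], str]) -> Dict[str, Dict[str, str]]:
    """Run every prompt against every model.

    Returns {prompt: {model: response}} so answers can be compared
    side by side. `ask(model, prompt)` is supplied by the caller.
    """
    results: Dict[str, Dict[str, str]] = {}
    for prompt in prompts:
        results[prompt] = {model: ask(model, prompt) for model in models}
    return results


def format_side_by_side(results: Dict[str, Dict[str, str]]) -> str:
    """Render the collected responses as plain text for manual review."""
    lines = []
    for prompt, by_model in results.items():
        lines.append(f"PROMPT: {prompt}")
        for model, response in by_model.items():
            lines.append(f"  [{model}] {response}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Stand-in for a real model call, so the sketch runs on its own.
    def fake_ask(model: str, prompt: str) -> str:
        return f"(answer from {model})"

    report = collect_responses(["model-a", "model-b"],
                               ["Write a function that reverses a string."],
                               fake_ask)
    print(format_side_by_side(report))
```

The harness only gathers the outputs. Judging their quality against your own needs is still a manual step.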

Local Server in LM Studio

Launching your offline Hugging Face model server is very straightforward:

- Open LM Studio

- Select one of your downloaded models

- Click the Start Server button

That's it! The default settings are generally suitable for most users. However, if you're looking to fine-tune your experience, feel free to adjust the options to match your specific needs or preferences.
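Once the server is running, any client that can speak the OpenAI-style chat API should be able to talk to it. The sketch below uses only the Python standard library. It assumes the server listens at `http://localhost:1234/v1` (check the address LM Studio shows in its server view) and uses `local-model` as a placeholder model name. Both values are assumptions you may need to change.

```python
import json
import urllib.request

# Assumption: the address shown in LM Studio's server view; adjust if yours differs.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload for a single user prompt."""
    return {
        "model": model,  # placeholder name, not a real model identifier
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str, base_url: str = BASE_URL, model: str = "local-model",
         timeout: float = 120.0) -> str:
    """Send one prompt to the local server and return the reply text."""
    body = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_reply(json.load(resp))


# Example (requires the LM Studio server to be running):
# print(chat("Write a haiku about working offline."))
```

Because the request and response shapes follow the OpenAI format, the same payload should also work with SDKs that let you override the base URL.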

Using Continue Extension

In Visual Studio Code, open the Extensions view. Type "Continue" in the search bar, find the correct extension, and install it.

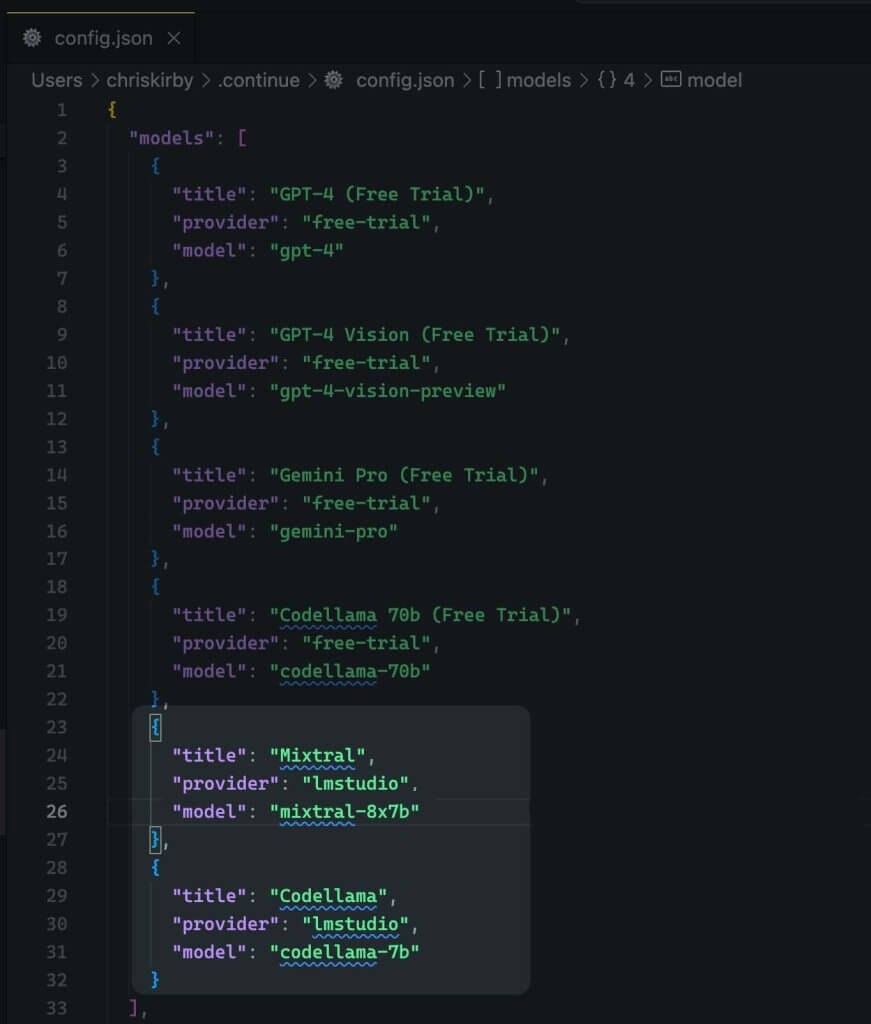

Now we need to edit the extension's configuration JSON to add LM Studio support. Add a model entry like this one:

    {
      "title": "Mixtral",
      "provider": "lmstudio",
      "model": "mixtral-8x7b"
    }

Now, save it.
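If you would rather script this change than edit the file by hand, something like the following could work. It is a sketch under two assumptions that you should verify against your installed version of Continue: that the configuration lives at `~/.continue/config.json`, and that model entries sit in a top-level `models` list. The helper names are hypothetical.

```python
import json
from pathlib import Path

# Assumed location of Continue's JSON config; verify for your installation.
CONFIG_PATH = Path.home() / ".continue" / "config.json"

# The model entry from this article.
LMSTUDIO_MODEL = {
    "title": "Mixtral",
    "provider": "lmstudio",
    "model": "mixtral-8x7b",
}


def add_model(config: dict, entry: dict) -> dict:
    """Return a copy of config with entry in its 'models' list.

    An existing entry with the same title is replaced, so running
    this twice does not create duplicates.
    """
    models = [m for m in config.get("models", [])
              if m.get("title") != entry["title"]]
    models.append(entry)
    return {**config, "models": models}


def update_config_file(path: Path = CONFIG_PATH,
                       entry: dict = LMSTUDIO_MODEL) -> None:
    """Read the config (if present), add the entry, and write it back."""
    config = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    path.parent.mkdir(parents=True, exist_ok=True)
    updated = add_model(config, entry)
    path.write_text(json.dumps(updated, indent=2) + "\n", encoding="utf-8")


# Example: back up your existing config first, then run
# update_config_file()
```

Replacing by title rather than blindly appending keeps the file tidy if you rerun the script after changing the model name.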

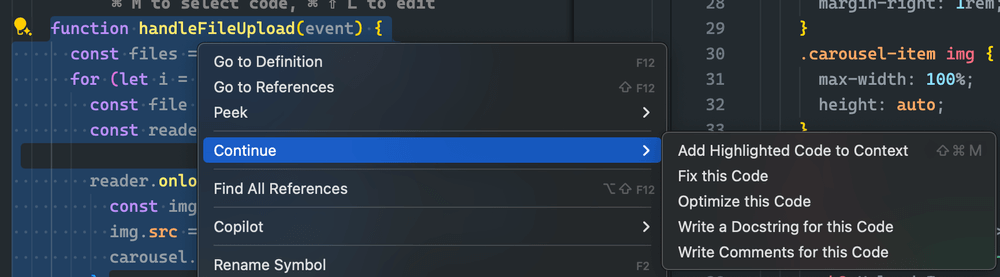

Next, select this local model in the Continue panel in VS Code so that the extension uses it instead of its default model.

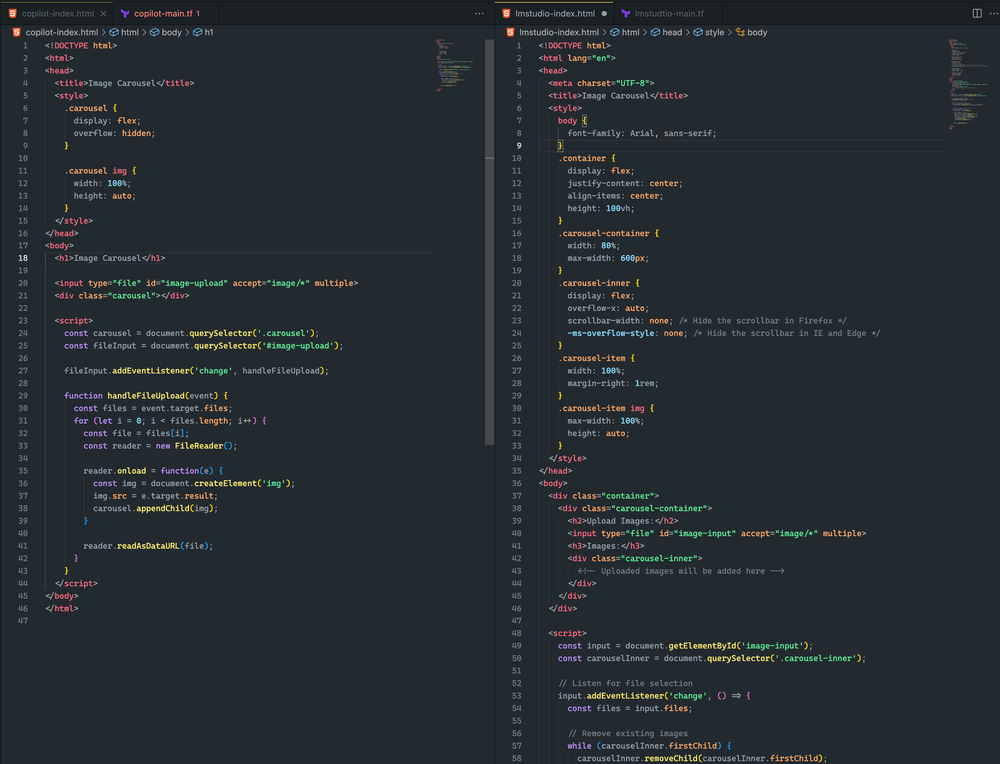

Evaluating Offline vs. Online Model Performance

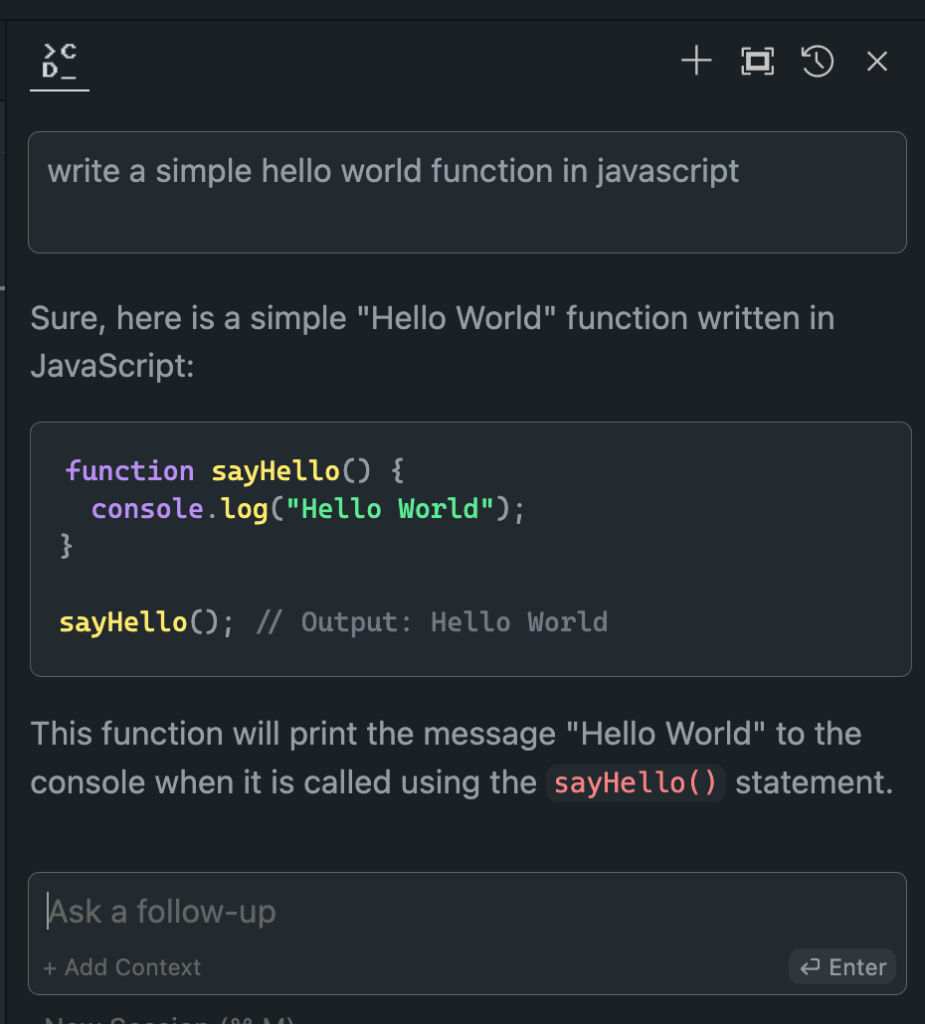

Now that we've set up our offline Hugging Face model, it's time for the moment of truth. Let's put it to the test against its online counterparts!

In my extensive trials, the results have been eye-opening:

- Performance: The offline model often matches, and in some cases, even surpasses its online equivalents.

- Speed: Without network latency, responses can be lightning-fast.

- Consistency: No more variability due to server load or connection issues.

While your experience may vary depending on your chosen model and hardware, don't be surprised if you find yourself preferring the offline version. The combination of local processing power and fine-tuned models can deliver impressive results.
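To check the speed claim on your own hardware rather than taking my word for it, you could time the same request against each backend. The helper below is a minimal sketch: it times any zero-argument callable, so you would wrap your own offline and online calls in functions and pass them in. The function names are illustrative.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(fn: Callable[[], object], runs: int = 5) -> List[float]:
    """Call fn `runs` times and return the wall-clock duration of each call in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return timings


def summarize(timings: List[float]) -> Dict[str, float]:
    """Summarize timings; the median is less sensitive to one slow run than the mean."""
    return {
        "median_s": statistics.median(timings),
        "min_s": min(timings),
        "max_s": max(timings),
    }


if __name__ == "__main__":
    # Stand-in workload so the sketch runs without any model or network.
    stats = summarize(measure_latency(lambda: sum(range(100_000)), runs=5))
    print(stats)
```

Keep the prompt and output length identical across backends, since generation time grows with the number of tokens produced.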

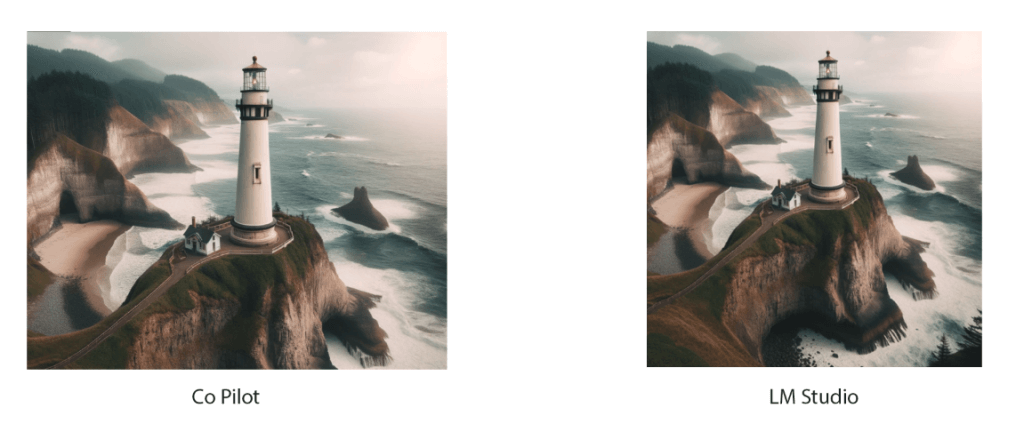

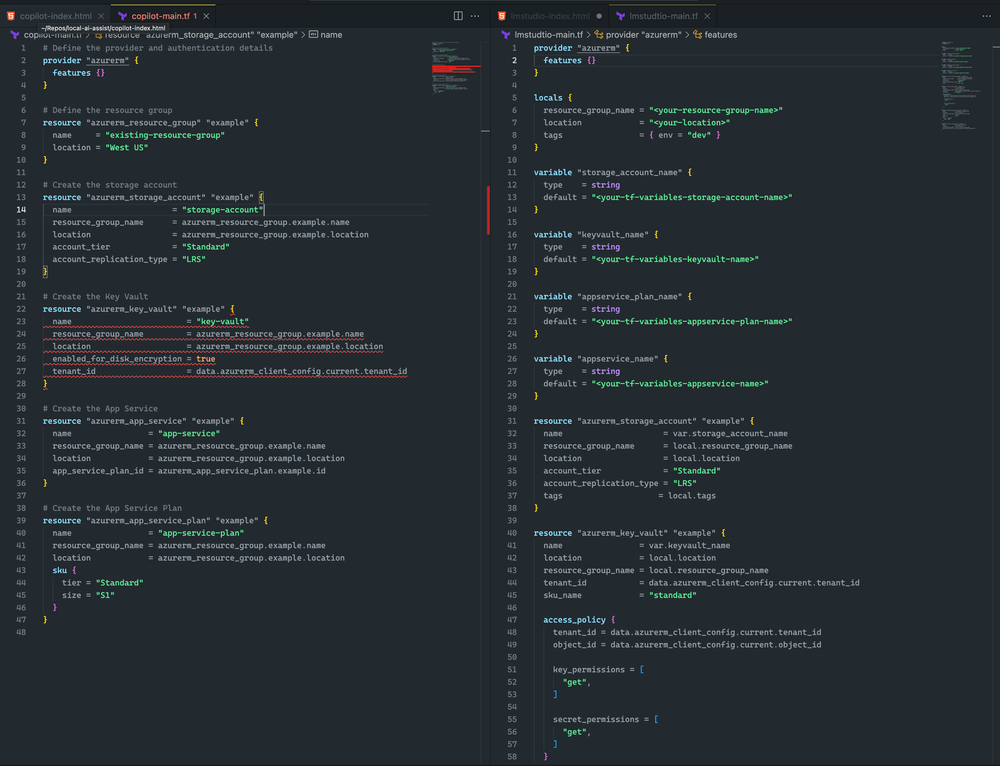

Here you can see the difference.

Both approaches were quite close. Although neither resulted in an image carousel, I successfully uploaded an image, and it rendered in both examples. With further prompt adjustments, I believe I can achieve an even better outcome.

I lean toward LM Studio and Mixtral because their Terraform output is more complete and has no syntax errors. Copilot's output has a minor issue: the key vault declaration is missing the sku_name, which is easy to fix.

Wrapping Up

Remember, the field of AI is rapidly evolving. By mastering offline deployment of Hugging Face models, you're not just keeping pace – you're staying ahead of the curve. Whether you're a developer, researcher, or AI enthusiast, this skill empowers you to push the boundaries of what's possible with local AI processing.

So, take the plunge. Experiment with different models, fine-tune your setup, and integrate these offline powerhouses into your workflow. You might just find that the future of AI development is right there on your local machine, waiting to be unleashed.