Sudden encounters with 404 errors can be frustrating and disruptive. The issue becomes even more confusing when Google Search Console starts reporting 404s for URLs ending in /1000, a pattern that many site owners mistake for a Google bug and worry will hurt user experience and SEO rankings.

To effectively manage and resolve these 404 errors, it is crucial to have a solid understanding of the root causes and best practices for addressing them. This ultimate guide will provide you with the tools and strategies needed to navigate and overcome the challenges presented by 404 errors on URLs ending in /1000.

What are 404 errors?

A 404 error, also known as a "Page Not Found" error, is an HTTP status code that indicates that the requested resource (such as a web page, file, image, or other content) could not be found on the server.

When a user tries to access a URL (Uniform Resource Locator) that does not exist or has been moved or removed from the server, the web server responds with a 404 error code.

This error can occur for various reasons, which are covered below. In each case, the user typically sees a standard error page displayed by the web server, indicating that the requested resource could not be found. Well-designed websites often provide custom 404 error pages with helpful information or suggestions to guide the user back to the intended content or the website's homepage.
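To make the idea of a helpful custom 404 page concrete, here is a minimal Python sketch. It is not tied to any particular web server or CMS: the `PAGES` site map and the `respond` function are hypothetical names invented for illustration. The sketch returns a 404 status for unknown paths and, where possible, suggests the closest existing page.

```python
from difflib import get_close_matches
from http import HTTPStatus

# Hypothetical site map: path -> page content.
PAGES = {
    "/": "Welcome to the homepage",
    "/blog/": "Latest articles",
    "/pricing/": "Plans and pricing",
    "/contact/": "Get in touch",
}


def respond(path):
    """Return (status, body) for a requested path."""
    if path in PAGES:
        return HTTPStatus.OK, PAGES[path]

    # Unknown path: answer 404, but try to point the visitor somewhere useful.
    body = "Sorry, we could not find that page."
    similar = get_close_matches(path, list(PAGES), n=1, cutoff=0.6)
    if similar:
        body += f" Did you mean {similar[0]}?"
    else:
        body += " Try starting from the homepage: /"
    return HTTPStatus.NOT_FOUND, body
```

A mistyped request such as `respond("/pricng/")` still gets a 404 status, which is what search engines need to see, while the body nudges the visitor toward `/pricing/`.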

404 Errors: Natural vs. Bug

Natural 404 Errors

Natural 404 errors are those that occur as a result of human interaction with a website's content. These errors are typically caused by user actions, such as:

- Broken Links: When a user clicks on a link that points to a non-existent or moved resource, a natural 404 error will occur. This can happen due to outdated links, typos, or content reorganization on the website.

- Mistyped URLs: If a user manually types an incorrect URL in the browser's address bar, either due to a typo or a misremembered URL, the server will respond with a 404 error because it cannot find the requested resource.

- Deleted or Moved Content: When website owners delete or move content, any links or URLs pointing to the old location will result in natural 404 errors for users trying to access that content.

- User Errors: In some cases, users may inadvertently cause 404 errors by manipulating URLs or trying to access resources they are not authorized to view. (Some servers deliberately answer such requests with 404 rather than 403 so they do not reveal that the resource exists.)

Natural 404 errors are expected and common occurrences on websites, as they are an inherent part of human interaction with the internet.

Bug 404 Errors

Bug 404 errors, on the other hand, are caused by automated processes or bots trying to access a website's resources. These errors can occur due to various reasons:

- Crawling and Indexing: Search engine bots and other web crawlers may encounter 404 errors when trying to index or crawl a website's content. This can happen if the website has broken links, outdated sitemaps, or incorrect server configurations.

- Malicious Bots: Some bots may attempt to access non-existent resources on a website, either for malicious purposes (such as trying to find vulnerabilities) or due to faulty programming.

- Integration Issues: If a website integrates with external services or APIs, bugs or misconfiguration in the integration process can lead to bots or scripts attempting to access non-existent resources, resulting in 404 errors.

- Coding Errors: Developers may inadvertently introduce bugs in their code that cause scripts or processes to access invalid URLs or resources, leading to bug 404 errors.

Unlike natural 404 errors, bug 404 errors are often unintended and can indicate issues with the website's code, configuration, or external integrations. These errors should be investigated and addressed promptly to ensure the website's proper functioning and security.

Handling 404 Errors

Regardless of whether the 404 error is natural or a bug, website owners and developers need to handle it appropriately. This can include:

- Implementing custom 404 error pages to provide a better user experience

- Analyzing server logs and error reports to identify and fix the root causes of 404 errors

- Implementing redirects or updating links to point to the correct resources

- Improving website structure and navigation to reduce the likelihood of users encountering 404 errors

By properly managing and addressing 404 errors, websites can ensure a smoother user experience, maintain their search engine rankings, and improve overall functionality and security.
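Analyzing server logs is the easiest of these steps to automate. The sketch below assumes your server writes access logs in the common Apache/Nginx "combined" format. The sample lines, IP addresses, and the `count_404_paths` helper are made up for illustration, so adjust the pattern if your log format differs.

```python
import re
from collections import Counter

# Matches the request and status part of a combined-format log line,
# e.g.: "GET /old-page HTTP/1.1" 404 312
REQUEST_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) '
)


def count_404_paths(log_lines):
    """Count how often each requested path returned a 404."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match and match.group("status") == "404":
            counts[match.group("path")] += 1
    return counts


# Invented sample data in combined log format.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Mar/2024:10:00:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.5 - - [10/Mar/2024:10:00:07 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [10/Mar/2024:10:01:15 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0"',
    '198.51.100.9 - - [10/Mar/2024:10:02:30 +0000] "GET /blog/post/1000 HTTP/1.1" 404 312 "-" "Googlebot/2.1"',
]

if __name__ == "__main__":
    for path, hits in count_404_paths(SAMPLE_LOG).most_common():
        print(hits, path)
```

Paths that show up repeatedly, like `/old-page` in the sample, are the best candidates for a redirect or a corrected link.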

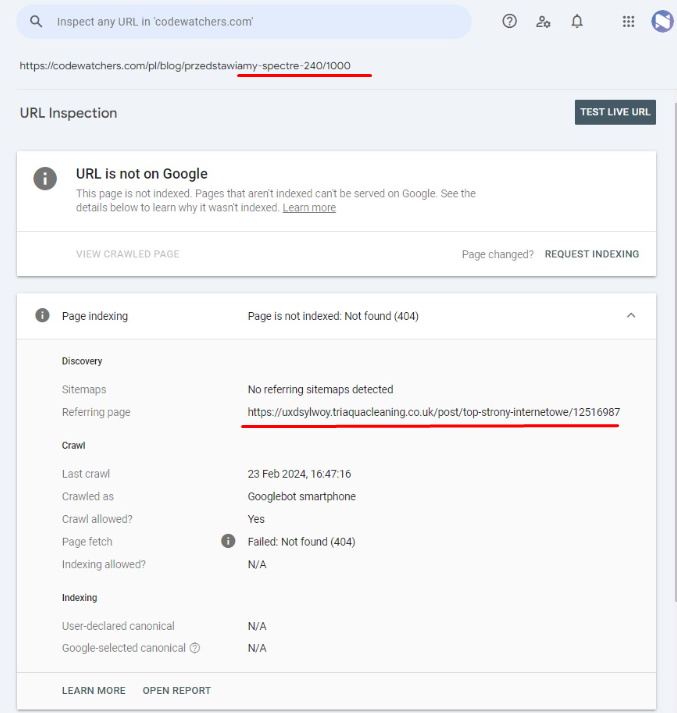

GSC 404 Error Report For /1000 URLs

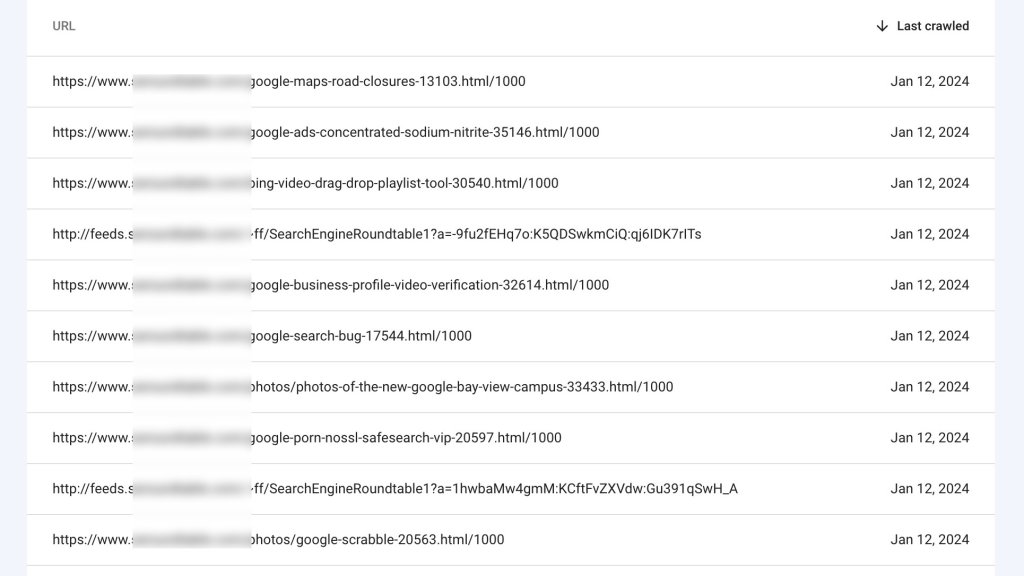

Google Search Console (GSC) has been reporting an unusual surge in 404 errors for URLs that end with "/1000". These errors are surfacing for URLs that do not exist on the respective websites, leading to confusion and concern among website owners and SEOs.

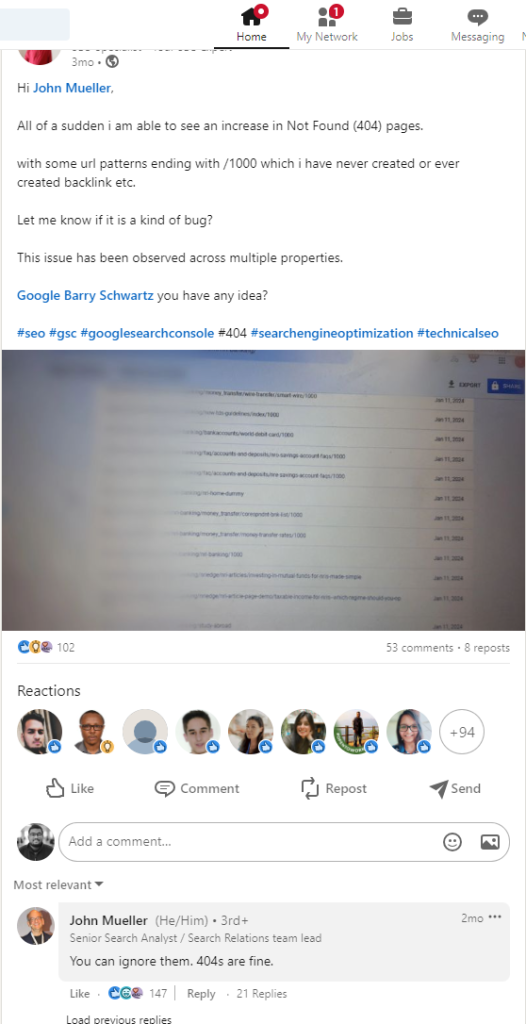

According to a thread in Google support, this is an unusual issue that surfaces mainly through Googlebot's crawling and started at the beginning of 2024.

The issue appears to be related to a spammy link network that is attempting to generate fake links by appending "/1000" to legitimate URLs.

Moreover, these links originate from external spammy websites, which can make the affected sites appear less credible. The worst part is that, as a website owner, you have no control over this.

When Google's crawlers try to access these modified URLs, they correctly return a 404 (Page Not Found) error, which is then reflected in the GSC reports.

However, it's important to note that these 404 errors do not have any negative impact on a website's search engine rankings or performance. Google has acknowledged this issue and has advised website owners to simply ignore these 404 error reports for URLs ending with "/1000".
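Ignoring the "/1000" entries is easier if you can separate them from 404s that do deserve attention. The following sketch assumes you have exported the list of 404 URLs from GSC into a plain Python list. The `split_404_report` function and the example.com URLs are hypothetical.

```python
from urllib.parse import urlsplit

SPAM_SUFFIX = "/1000"


def split_404_report(urls, suffix=SPAM_SUFFIX):
    """Separate URLs whose path ends in the spam suffix from all other 404 URLs."""
    spam_pattern, needs_review = [], []
    for url in urls:
        # Compare against the path only, ignoring query strings and a trailing slash.
        path = urlsplit(url).path.rstrip("/")
        if path.endswith(suffix):
            spam_pattern.append(url)
        else:
            needs_review.append(url)
    return spam_pattern, needs_review


# Invented example URLs standing in for a GSC export.
REPORTED_404S = [
    "https://example.com/blog/my-post/1000",
    "https://example.com/blog/my-post/1000/",
    "https://example.com/products/21000",
    "https://example.com/old-landing-page",
]

if __name__ == "__main__":
    spam, review = split_404_report(REPORTED_404S)
    print("Safe to ignore:", spam)
    print("Worth a closer look:", review)
```

Note that `/products/21000` lands in the review list: only a full `/1000` path segment at the end counts as the spam pattern, so real broken links are not hidden by accident.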

Possible Reason for /1000 Error

Although the spammy link network is the most likely cause, it is worth running a few checks to rule out any potential issues on your end:

- Check if you have made any new additions, installations, updates, or configuration changes to your website recently. Sometimes, these changes can inadvertently introduce broken links or server configuration issues that lead to 404 errors.

- Review your website server logs for any new or unusual activities that could be causing these errors. Look for patterns or anomalies that might indicate a security breach or other issues.

- In Google Search Console, examine the crawl errors and indexing reports for any patterns in the 404 errors. This can help you identify if the errors are limited to a specific section of your website or if they are more widespread.

- Consider using the robots.txt file to disallow the crawling of URLs with the "/1000" pattern. While this won't directly address the issue, it can help reduce the number of 404 error reports you receive for these specific URLs.
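If you do try the robots.txt route, one candidate rule is `Disallow: /*/1000$`, which relies on the `*` (any run of characters) and `$` (end of URL) wildcards that Google documents for robots.txt. Before deploying such a rule, it helps to check which paths it would catch. The sketch below is a simplified, unofficial approximation of that matching logic. The function names are hypothetical, and Python's built-in `urllib.robotparser` is not used because it does not handle these wildcards.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate a Google-style robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then rejoin with '.*' where the pattern had '*'.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(path, disallow_pattern):
    """Return True if the URL path would be matched by the Disallow pattern."""
    return robots_pattern_to_regex(disallow_pattern).match(path) is not None


if __name__ == "__main__":
    rule = "/*/1000$"
    for path in ["/blog/post/1000", "/blog/post/1000/", "/products/21000"]:
        print(path, "->", "blocked" if is_blocked(path, rule) else "allowed")
```

Keep in mind that the rule would also block any legitimate page whose path really ends in `/1000`, and that variants with a trailing slash would need a second rule.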

What Do Experts Say About This?

Here are some key points regarding this issue based on comments we have found on Reddit and LinkedIn:

- No Action Required: Google has confirmed that these 404 errors can be safely ignored, as they do not affect a website's SEO or rankings.

- Spammy Link Network: The "/1000" URLs are likely generated by a spammy link network that is attempting to create fake links to manipulate search engine rankings. However, Google's algorithms are designed to detect and disregard such spammy tactics.

- Accurate 404 Reports: While the reported URLs do not exist on the websites, the 404 errors reported by GSC are accurate, as these modified URLs return a 404 (Page Not Found) status code when crawled.

- No Need for Disavow or Redirection: Google has advised against taking actions such as disavowing the links or setting up redirects for these URLs, as they have no positive or negative impact on a website's SEO.

- Temporary Issue: The surge in these 404 error reports appears to be a temporary phenomenon, and website owners can expect the reports to subside over time as Google's systems adapt to this spammy tactic.

While the influx of 404 error reports for URLs ending with "/1000" may seem concerning, these errors pose no threat to a website's search engine visibility or rankings. They are a byproduct of a spammy link network that Google's algorithms are designed to handle.

Wrapping Up

In summary, the influx of 404 error reports for URLs with a specific pattern (ending in "/1000") is the result of a spammy tactic by bad actors trying to manipulate search rankings.

However, these errors do not negatively impact a website's SEO or performance. Search engines like Google are aware of this issue and have advised website owners to simply ignore these error reports, as their algorithms can handle such spammy activities.

No further action is required from website owners regarding these errors, which are expected to diminish over time as search engines adapt to counter these tactics. Website owners can rest assured that their search visibility and rankings remain unaffected by these errors.